Machine Learning Operations (MLOps) is a set of processes that automate and accelerate the machine learning lifecycle, from exploration and experimentation to deploying machine learning models to production.

History

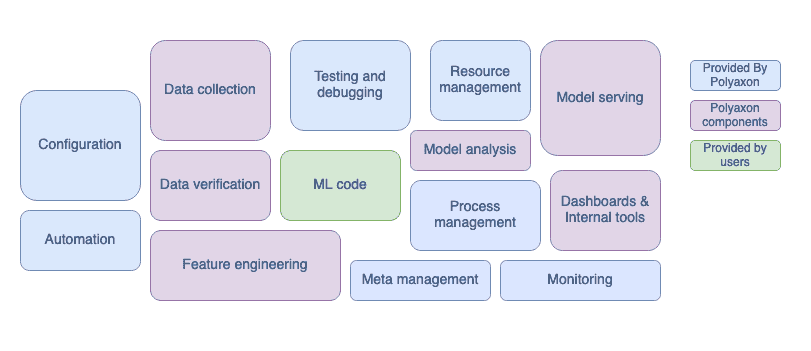

In 2015, a paper by Google engineers pointed out the challenges and incurring technical debt that arises when using ML models in production. The following diagram, taken from Hidden Technical Debt in Machine Learning Systems and adapted to how Polyaxon operates and abstracts the ML lifecycle, shows The required infrastructure for a real-world ML system:

The paper made clear that machine learning operations is no longer a discipline only for data scientists. It is also relevant to any software engineering practitioner who faces challenges when deploying models to production, scaling them, and automating processes.

Because some of the complexities and problems of ML systems can be solved by adopting DevOps practices, MLOps evolved as a combination of DevOps best practices adapted to the complexities of machine learning. It has gained a lot of traction and recognition in the past few years, especially as more and more companies incorporate machine learning into their systems and face similar challenges.

Why do you need MLOps?

Creating a machine learning model that generates predictions from an input is easy when a single data scientist builds it for a toy project. However, creating a machine learning model that is reliable, fast, accurate, and usable by a large number of users is difficult. It requires:

- Data ingestion

- Data versioning

- Data validation, monitoring, and quality checks, often including the detection of changes in data distribution, anomaly detection, or sampling to ensure that the model is not biased

- Data preprocessing and feature generation

- Model training and tuning, often including keeping track of the parameters used to tweak the ML models

- Model analysis, including performance tracking, evaluation, and fairness analysis

- Model validation, versioning, and release management

- Model deployment and monitoring, which differ significantly from deploying and monitoring traditional software or a web app

- Feedback and alerting based on real-world data
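Data validation above includes detecting changes in data distribution. The following is a minimal sketch of one such check, the Population Stability Index (PSI), assuming a single numeric feature and a reference sample captured at training time. The function name `population_stability_index`, the 10 equal-width bins, and the 0.2 alert threshold are illustrative assumptions, not part of Polyaxon.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature using PSI.

    Bin edges are equal-width over the reference sample's range; values
    outside that range are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant data

    def histogram(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        total = len(values)
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / total, eps) for c in counts]

    ref_pct = histogram(reference)
    cur_pct = histogram(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_pct, cur_pct))


if __name__ == "__main__":
    reference = [float(x % 100) for x in range(1000)]
    shifted = [float(x % 100) + 30 for x in range(1000)]
    print(f"same data: {population_stability_index(reference, reference):.4f}")
    psi = population_stability_index(reference, shifted)
    print(f"shifted data: {psi:.4f}, drift alert: {psi > 0.2}")
```

Identical samples yield a PSI of zero, while a shifted sample yields a large value. The 0.2 threshold is an often-cited rule of thumb rather than a standard; in practice, the threshold would be tuned per feature and wired into the feedback and alerting step.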

How does MLOps help?

By enforcing certain processes and practices, MLOps can help:

- Reduce risks

- Streamline the development lifecycle

- Improve collaboration between teams

- Increase reliability

- Improve performance and scalability

- Improve security and visibility, and reduce time to market

What tools to use?

There are many options for solving MLOps challenges:

- Full-featured machine-learning platforms

- Single-purpose solutions

- In-house solutions based on custom code and open-source libraries

- Cloud providers with MLOps offerings: Vertex AI by Google Cloud, Azure Machine Learning by Microsoft, and SageMaker by AWS

How Polyaxon fits into the MLOps lifecycle

Polyaxon comes with several features to streamline the machine learning lifecycle:

- Training reproducibility with advanced logging features for ML runs to keep track of datasets, code, configurations, metrics, and environments

- Orchestration to scale experimentation using powerful managed compute or on-premises clusters

- Efficient workflows with built-in scheduling and management capabilities to build and deploy with continuous integration/continuous deployment (CI/CD)

- Advanced capabilities to meet governance and control objectives and promote component, model and artifact versions
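To illustrate why tracking datasets, code, configurations, metrics, and environments matters for reproducibility, here is a minimal sketch of a run record that fingerprints a run's inputs so that two runs with the same setup can be matched. It uses only the Python standard library; the `RunRecord` class, its fields, and the `sha256_of_bytes` helper are hypothetical and do not reflect Polyaxon's tracking API or storage format.

```python
import hashlib
import json
import platform
import sys
from dataclasses import asdict, dataclass, field


def sha256_of_bytes(data: bytes) -> str:
    """Hypothetical helper: content hash used to identify a dataset version."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class RunRecord:
    """Hypothetical record of everything that went into one training run."""

    dataset_hash: str
    code_version: str  # e.g. a git commit SHA supplied by the caller
    config: dict
    environment: dict = field(
        default_factory=lambda: {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        }
    )
    metrics: dict = field(default_factory=dict)

    def fingerprint(self) -> str:
        """Hash the inputs (not the metrics) so identical setups match."""
        inputs = {
            "dataset_hash": self.dataset_hash,
            "code_version": self.code_version,
            "config": self.config,
            "environment": self.environment,
        }
        # sort_keys makes the JSON, and therefore the hash, key-order independent
        payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
        return sha256_of_bytes(payload)

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


if __name__ == "__main__":
    data = b"feature_a,feature_b,label\n1,2,0\n3,4,1\n"
    run = RunRecord(
        dataset_hash=sha256_of_bytes(data),
        code_version="abc1234",  # placeholder commit SHA
        config={"learning_rate": 0.01, "epochs": 5},
    )
    run.metrics["accuracy"] = 0.9  # illustrative value, not a real result
    print(run.fingerprint())
    print(run.to_json())
```

Metrics are deliberately excluded from the fingerprint: two runs with identical inputs should share a fingerprint even if nondeterminism makes their metrics differ, which is exactly the case a reproducibility audit needs to surface.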

Polyaxon promotes best practices by providing interfaces that encourage:

- Collaboration: Abstractions to highlight the work of organization members and teams while enforcing access control over environments, datasets, connections, and resources.

- Reproducibility: A packaging format, tracking features, and lineage provide scientists with an auditing and reproducibility layer that makes every model portable, repeatable, and reproducible, as well as a history and knowledge center to query, filter, sort, and extract insights about anything that went into the system.

- Continuity: A streamlined process to go from model training to testing environments, to production deployments, and to continuous retraining of the model.

- Monitoring: Integration with systems to monitor performance, staleness, and drift.

Polyaxon’s core is open-source and built on open standards:

- REST API: At its core, Polyaxon is a self-consuming RESTful JSON API with decoupled clients and front-end. We provide lots of tooling to improve data scientists’ work, but at the end of the day it’s just JSON: everything in the platform can be accessed through an open API.

- Open standards: Polyaxon is built on top of open standards and technologies: containers for packaging, Kubernetes for orchestration, and open REST APIs, which make it easy and safe to extend the platform. Data scientists can choose the software environment for their experiments and use any language, framework, or library.

- Open source: Polyaxon’s core engine, the CLI, the Polyaxon agent, and our tracking module are all open source, so you can integrate with them and, ideally, add any missing features.
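Because everything is exposed as JSON over a REST API, any HTTP client can consume it. The following is a minimal standard-library sketch of that pattern. The endpoint path is left to the caller, and the `token <value>` authorization scheme and the `{"results": [...]}` list shape are assumptions for illustration; check the Polyaxon API reference for the actual paths, authentication, and response schema. The function names are hypothetical.

```python
import json
import urllib.request
from typing import Any


def build_request(base_url: str, path: str, token: str) -> urllib.request.Request:
    """Build a GET request for a JSON endpoint; the path is caller-supplied."""
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/json",
            # Assumed token-based auth header; verify against your deployment.
            "Authorization": f"token {token}",
        },
        method="GET",
    )


def extract_names(body: bytes) -> list[str]:
    """Pull each item's "name" from an assumed {"results": [...]} payload."""
    payload: dict[str, Any] = json.loads(body)
    return [item.get("name", "") for item in payload.get("results", [])]


def fetch_names(base_url: str, path: str, token: str) -> list[str]:
    """Send the request and parse the JSON body (requires a reachable server)."""
    request = build_request(base_url, path, token)
    with urllib.request.urlopen(request, timeout=10) as response:
        return extract_names(response.read())


if __name__ == "__main__":
    # Offline demo: inspect the request and parse a canned response body.
    req = build_request("http://localhost:8000", "/api/v1/example", "my-token")
    print(req.get_method(), req.full_url)
    print(extract_names(b'{"results": [{"name": "run-a"}, {"name": "run-b"}]}'))
```

Separating request construction from parsing keeps both halves testable without a running server; `fetch_names` is the only part that touches the network.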