The challenge of data science projects

Data science is a challenging field, and it’s easy to get lost in the weeds when you’re assembling your team and planning your project. Fortunately, a handful of core steps apply to planning almost any data science project.

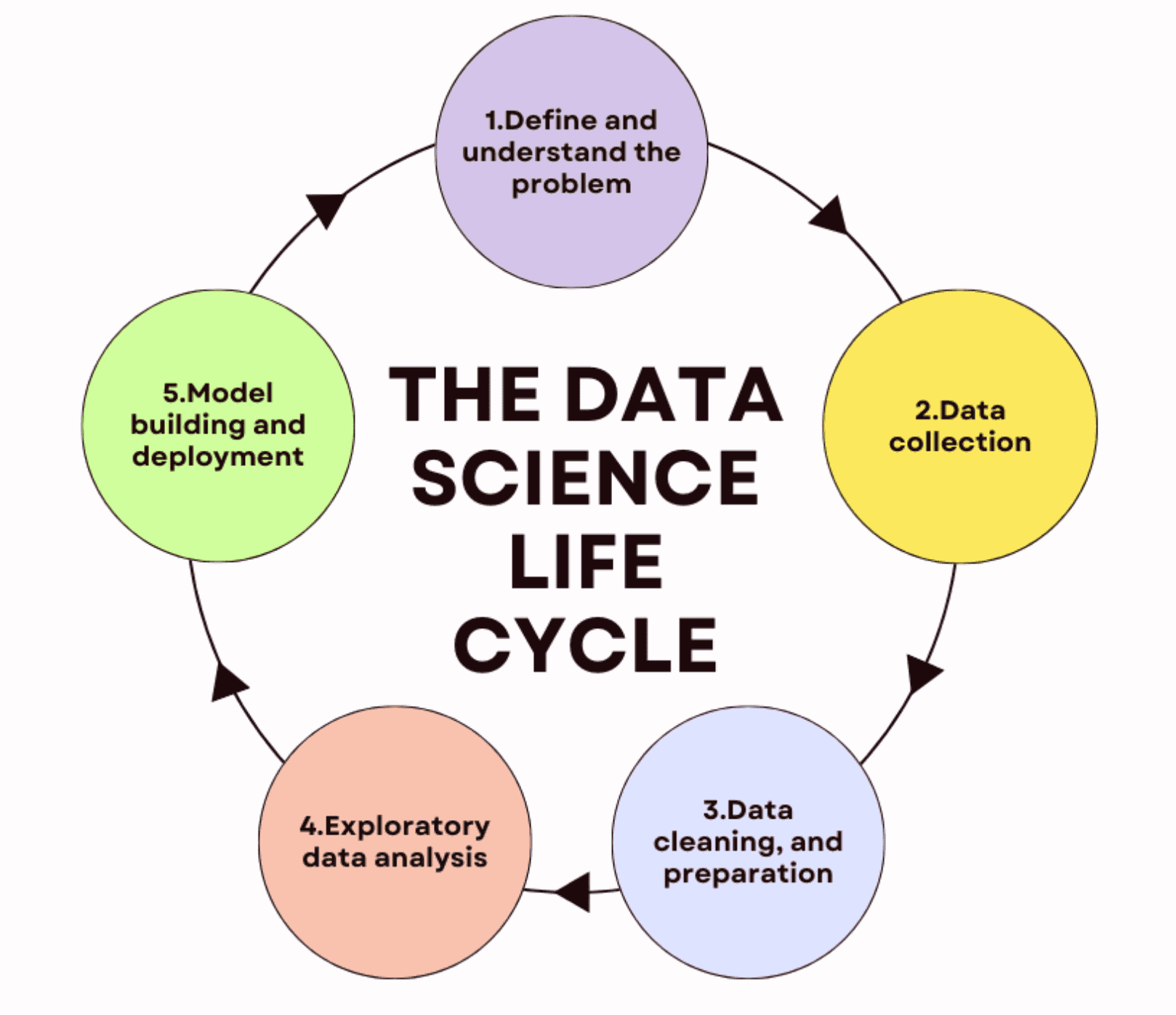

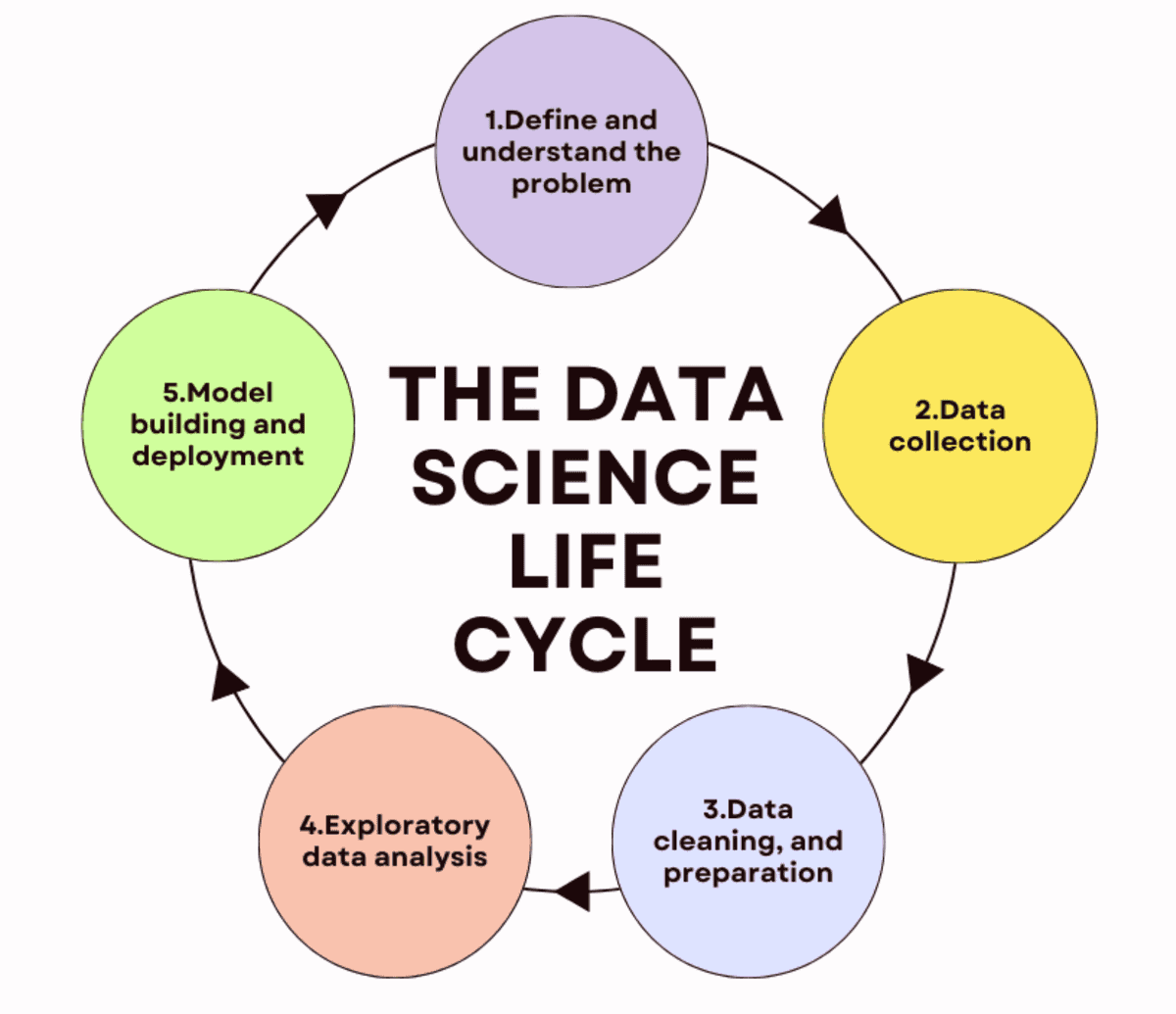

The data science life cycle

It’s worth noting first that the data science life cycle looks a little different from team to team. There are several interpretations, but they all broadly follow this structure:

Get clarity on what you’re trying to achieve

The first step in any project should be understanding the goal of the project. What do you want to accomplish? What are you trying to measure? What questions do you want to answer? The answers to these questions will guide the rest of your work and determine how much time and effort it takes. If you don’t know what the goal is, then it’s impossible to know whether you’ve succeeded or failed, which makes it hard to use data science as part of an ongoing business process.

This is where many projects go off the rails. The goal of a data science project should be clear, specific and measurable; if it isn’t, you may have lost your way before you even started.

In addition to being clear about what you are trying to do, you also need to understand why you are doing it. What’s the underlying business problem? Is this something that will matter in six months’ time or a year from now? Why do we care about this problem? Can we measure its impact? What are we willing to do in order to solve it?
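One practical way to make a goal clear, specific and measurable is to pin it down as a metric, a baseline, and a target before any modeling begins. The sketch below assumes Python 3.9+; the `SuccessCriterion` class and the churn-recall numbers are hypothetical illustrations, not part of any library or of a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriterion:
    """Hypothetical, minimal record of a measurable project goal."""

    metric: str                    # what is measured, e.g. "churn recall"
    baseline: float                # value achieved today, before the project
    target: float                  # value that counts as success
    higher_is_better: bool = True  # False for error metrics such as MAE

    def is_met(self, observed: float) -> bool:
        """Return True if an observed metric value reaches the target."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target

    def improvement(self, observed: float) -> float:
        """Signed improvement over the baseline (positive means better)."""
        delta = observed - self.baseline
        return delta if self.higher_is_better else -delta


# Hypothetical goal: raise recall on churned customers from 0.60 to 0.75.
goal = SuccessCriterion(metric="churn recall", baseline=0.60, target=0.75)
print(goal.is_met(0.78))  # True
print(goal.is_met(0.70))  # False
```

Writing the goal down this way forces the team to agree on the metric and the success threshold up front, which is exactly what makes it possible to say later whether the project succeeded.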

Collaboration

Data science and MLOps involve a wide range of stakeholders, including data scientists, ML engineers, IT professionals, and business stakeholders. Effective collaboration between these stakeholders is key to ensuring that projects are aligned with business goals and that data science and ML workflows are efficient and effective.

Data collection

Data scientists often assume that all data is good data, but that’s not always true. You want clean, high-quality datasets that are relevant to your particular problem and that yield real insights along the way, not just numbers. Data quality varies widely between industries and companies, so make sure you know exactly what kind of data you are collecting.
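Before trusting a dataset, a quick automated check for missing values and duplicate keys can surface quality problems early. This is a minimal sketch in Python 3.9+, assuming records arrive as a list of dicts; the `quality_report` function, the `customer_id` key, and the sample rows are hypothetical.

```python
from collections import Counter
from typing import Any


def quality_report(records: list[dict[str, Any]], key: str) -> dict[str, Any]:
    """Summarize basic quality issues in a list of records (one dict per row)."""
    fields = sorted({f for r in records for f in r})
    # A value counts as missing if the field is absent, None, or an empty string.
    missing = {
        f: sum(1 for r in records if r.get(f) in (None, ""))
        for f in fields
    }
    # Any key value that appears more than once is a duplicate.
    key_counts = Counter(r.get(key) for r in records)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1 and k is not None)
    return {"rows": len(records), "missing": missing, "duplicate_keys": duplicates}


# Hypothetical sample rows with a blank country, a missing age, and a repeated id.
rows = [
    {"customer_id": 1, "country": "DE", "age": 34},
    {"customer_id": 2, "country": "", "age": None},
    {"customer_id": 2, "country": "FR", "age": 41},
    {"customer_id": 3, "country": "US"},
]
report = quality_report(rows, key="customer_id")
print(report)
# {'rows': 4, 'missing': {'age': 2, 'country': 1, 'customer_id': 0}, 'duplicate_keys': [2]}
```

What counts as "missing" is a judgment call; real datasets often also use sentinel values such as `-1` or `"N/A"`, which this sketch would not catch.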

Once you understand what kind of information you need, the next step is conceptually simple: collect it. In practice, you might need to gather data from multiple sources (e.g., logs from different servers), combine data from different sources (e.g., event logs with customer profiles), or apply some form of processing (e.g., extracting features from raw text). Depending on the case, this may mean writing your own code or SQL queries, or calling existing APIs and third-party services.
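Combining event logs with customer profiles is often a single SQL join. The sketch below uses Python’s built-in `sqlite3` module with an in-memory database so it runs without a server; the table layouts, the `events_per_customer` function, and the sample rows are hypothetical.

```python
import sqlite3


def events_per_customer(events, customers):
    """Count events per customer and attach each customer's plan via a SQL join."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    try:
        conn.execute("CREATE TABLE events (customer_id INTEGER, event TEXT)")
        conn.execute(
            "CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT, plan TEXT)"
        )
        conn.executemany("INSERT INTO events VALUES (?, ?)", events)
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", customers)
        # LEFT JOIN keeps events from customers with no profile (plan is NULL -> None),
        # so gaps between the two sources stay visible instead of silently vanishing.
        return conn.execute(
            """
            SELECT e.customer_id, c.plan, COUNT(*) AS n_events
            FROM events AS e
            LEFT JOIN customers AS c ON c.customer_id = e.customer_id
            GROUP BY e.customer_id, c.plan
            ORDER BY e.customer_id
            """
        ).fetchall()
    finally:
        conn.close()


# Hypothetical sample rows standing in for two real sources.
events = [(1, "login"), (1, "purchase"), (2, "login"), (3, "login")]
customers = [(1, "Ana", "premium"), (2, "Ben", "free")]
print(events_per_customer(events, customers))
# [(1, 'premium', 2), (2, 'free', 1), (3, None, 1)]
```

Choosing a `LEFT JOIN` over an inner join is deliberate here: customer 3 has events but no profile, and an inner join would drop that row without warning.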

Exploratory data analysis

Exploratory data analysis is a process of examining data to identify patterns and draw insights. The goal of exploratory data analysis is to gain an understanding of the data and its characteristics so that one can design a well-informed experiment.

The basic idea behind exploratory data analysis is that you can spot interesting patterns in your data by simply looking at it. You don’t need to use any special software or pre-defined algorithms, though some tools might help you with this process.

Analysis tools and techniques help you understand the properties of your data, including how it’s distributed, what anomalies exist, and how many values are missing. For example, with stock market data you might use histograms or box plots to see how prices were distributed over a given period, or a scatter plot of trading volume against price to look for a relationship between the two. Visualizations like these are useful for exploring the structure and patterns in your dataset before doing any deeper analysis.
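The numbers behind a box plot are easy to compute directly, which makes a useful first pass before any charting. This minimal sketch uses only Python’s `statistics` module (3.8+) to report the count, missing values, quartiles, and outliers under Tukey’s 1.5 × IQR rule, the whisker convention many box-plot tools use by default. The `summarize` function and the sample prices are hypothetical.

```python
import statistics
from typing import Optional


def summarize(values: list[Optional[float]]) -> dict:
    """Numeric summary of one column: count, missing, quartiles, and IQR outliers."""
    present = [v for v in values if v is not None]  # None marks a missing value
    # Quartiles via the "inclusive" method (needs at least two values).
    q1, median, q3 = statistics.quantiles(present, n=4, method="inclusive")
    iqr = q3 - q1
    # Tukey's rule: points more than 1.5 * IQR beyond the quartiles are flagged.
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [v for v in present if v < low or v > high]
    return {
        "count": len(present),
        "missing": len(values) - len(present),
        "mean": statistics.fmean(present),
        "q1": q1,
        "median": median,
        "q3": q3,
        "outliers": outliers,
    }


# Hypothetical closing prices with one gap and one suspicious spike.
prices = [10.0, 11.0, 12.0, 12.5, 13.0, None, 40.0]
summary = summarize(prices)
print(summary["median"], summary["outliers"], summary["missing"])  # 12.25 [40.0] 1
```

Note how the single spike pulls the mean (about 16.4) well above the median (12.25); comparing the two is a quick way to spot skew or anomalies.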

Model building

Once the data is prepared, it is time to split it into training, validation, and test sets that will be used to develop the machine learning models. To build a robust model, data scientists need to try different approaches and compare them to see which works best, and to figure out which hyperparameters matter and which don’t. Data scientists are also encouraged to use an experimentation platform like Polyaxon to track their experiments and streamline their daily workflow.
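The split and the hyperparameter search described above can be sketched in plain Python. This is a minimal illustration under stated assumptions (Python 3.9+, a reproducible shuffled split, and an exhaustive grid search scored on the validation set); `train_val_test_split`, `grid_search`, and the threshold "model" are hypothetical, and the sketch does not use Polyaxon or any other platform’s API.

```python
import itertools
import random
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def train_val_test_split(rows: Sequence[T], val_frac: float = 0.15,
                         test_frac: float = 0.15, seed: int = 0):
    """Shuffle once with a fixed seed, then cut into train/validation/test."""
    if val_frac + test_frac >= 1:
        raise ValueError("val_frac + test_frac must be < 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def grid_search(fit: Callable[..., object], score: Callable[[object], float],
                grid: dict[str, list]) -> tuple[dict, float]:
    """Try every hyperparameter combination; keep the best validation score."""
    best_params, best_score = {}, float("-inf")
    names = list(grid)
    for combo in itertools.product(*(grid[n] for n in names)):
        params = dict(zip(names, combo))
        model = fit(**params)  # fit() is expected to use the training set only
        s = score(model)       # score() is expected to use the validation set only
        if s > best_score:
            best_params, best_score = params, s
    return best_params, best_score


# Hypothetical toy data: the label is True when x >= 50.
data = [(x, x >= 50) for x in range(100)]
train, val, test = train_val_test_split(data)


def fit(threshold):
    # Trivial hypothetical "model" that ignores the training data.
    return lambda x: x >= threshold


def accuracy(model, rows):
    return sum(model(x) == y for x, y in rows) / len(rows)


best, val_acc = grid_search(fit, lambda m: accuracy(m, val), {"threshold": [30, 50, 70]})
print(best, val_acc)
```

The test set is never touched during the search; it is held back so the final, chosen model can be evaluated once on data that played no part in picking its hyperparameters. An experiment tracker would typically record each parameter/score pair this loop produces.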

Model deployment, monitoring and optimization

Once a model is built, it is deployed into the production environment. A deployed model may need to scale, and it will need to be monitored to detect bias and the drift that occurs as incoming data changes. Ongoing monitoring of data science and ML workflows helps identify and address issues in a timely manner, while ongoing optimization improves the efficiency and effectiveness of these workflows over time.
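One common way to quantify data drift for a single feature is the Population Stability Index (PSI), which compares the distribution seen at training time with the distribution arriving in production. The sketch below is a minimal stdlib-only version that assumes equal-width bins over the reference range (many implementations use quantile bins instead); the `psi` function and the sample values are hypothetical. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but that is an industry convention rather than a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference column

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        # A small floor keeps log() finite when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training-time sample vs. a shifted live sample.
reference = [float(i) for i in range(100)]
shifted = [v + 50 for v in reference]
print(psi(reference, reference))  # 0.0
print(psi(reference, shifted))
```

In a monitoring job, a score like this would be computed per feature on a schedule and compared against a threshold to trigger an alert or a retraining review.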