As we prepare for the GA release of Polyaxon v1, we want to share what we have been working on over the last 4 months. The v1 release delivers new functionality and features to streamline the machine learning lifecycle.

In this blog post, we briefly describe some of the new features and improvements. Over the following weeks, we will share more blog posts that dive into each of the new features in more detail.

Polyaxon is a Machine Learning (ML) and Deep Learning (DL) automation platform.

TL;DR

- New Initializers: data, git, dockerfiles, containers, custom connections.

- New Automatic collection of job’s outputs and artifacts.

- New Support for injecting sidecars.

- New Polyflow (Support for several workflows): jobs, services, parallel strategies, hyper-parameters tuning strategies, directed acyclic graph (DAG) strategies, distributed strategies, scheduling strategies, early stopping strategies (metric & status).

- Improvement Polytune (previously experiment groups): Improved and customizable hyper-parameters tuning algorithms.

- Improvement Polyaxon for Kubeflow.

- New Polyaxon for TFX.

- New Polyaxon for Ray (alpha).

- New Polyaxon for Dask (alpha).

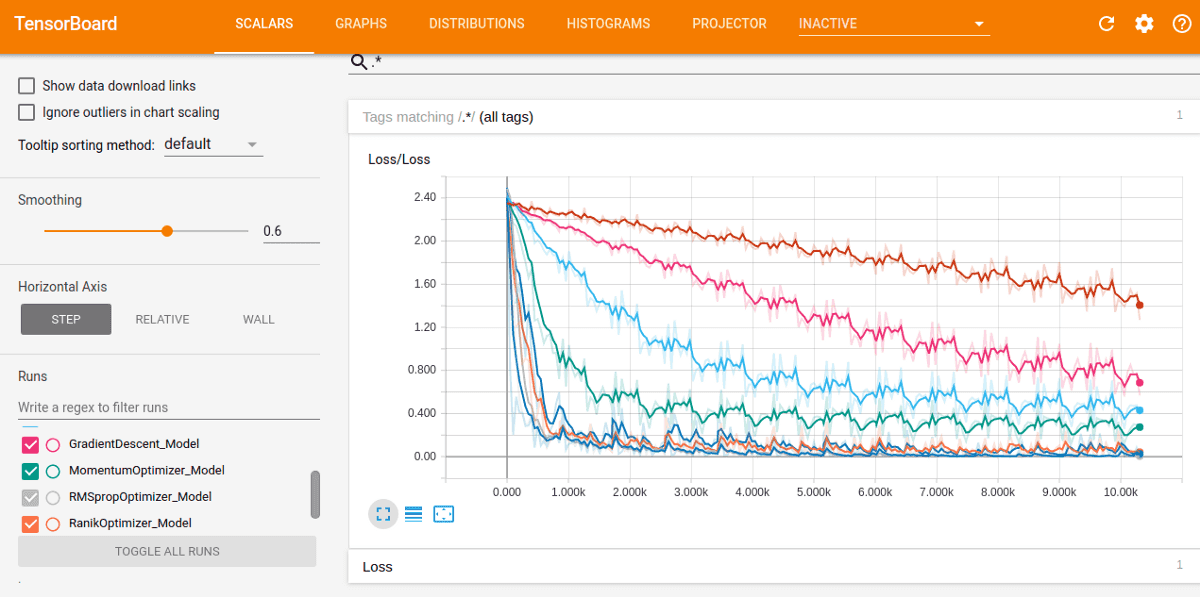

- Improvement/New Open source driven visualization: Tensorboard, Jupyter Notebook, JupyterLab, Apache Zeppelin, Plotly Dash, Streamlit, Voila, custom visualizations with bokeh/plotly, custom dashboard based on your favorite languages/tools.

- New Possibility to deploy models as internal tools.

- New Hub: highly productive and reusable components for data processing, ML training, visualizations, …

- Improvement Tracking: metrics, images, graphs, audios, videos, html, text.

- Improvement Build step is not required to start experiments/runs.

- Improvement Support for the full spec of Kubernetes container specification.

- Improvement PQL: Polyaxon’s query language, with an improved and slightly different syntax that is used uniformly for querying data, dashboarding, setting conditions in pipelines, and setting watchdogs for drift management.

- Improvement Operator v1beta1.

- Improvement Hyper-parameters tuning: scale to 0.

- Improvement Polyaxon streams: better handling and storage of logs, metrics, and events.

- New Polyaxon agent open-source.

- New Polyaxon Cloud (previously PaaS): a self-serve hybrid platform where you get to keep 100% of your code, data, models, logs, metrics, and connections private and on your own on-prem or cloud cluster, with the possibility to scale to 0, while still taking full advantage of our managed platform for: database persistence, orchestration, clusters & namespaces management, teams management, agents & queues management, ACL & RBAC management.

Read on to find out what’s new, what’s changed, and what’s coming.

MLOps

Before diving into the new features of this release, it’s important to observe the changes over the last 2 years since the initial public release of Polyaxon.

The machine learning infrastructure landscape has seen several developments: the emergence of new platforms and concepts; increased awareness of the importance of reproducibility, provenance, portability, and collaboration; better accountability and model governance; and, finally, a graph-oriented approach to data processing, machine learning experimentation, and model deployment. Some terms have become the standard for distinguishing traditional software CI/CD (DevOps) from data processing, ETLs, and data analytics (DataOps), and from machine learning operations (MLOps).

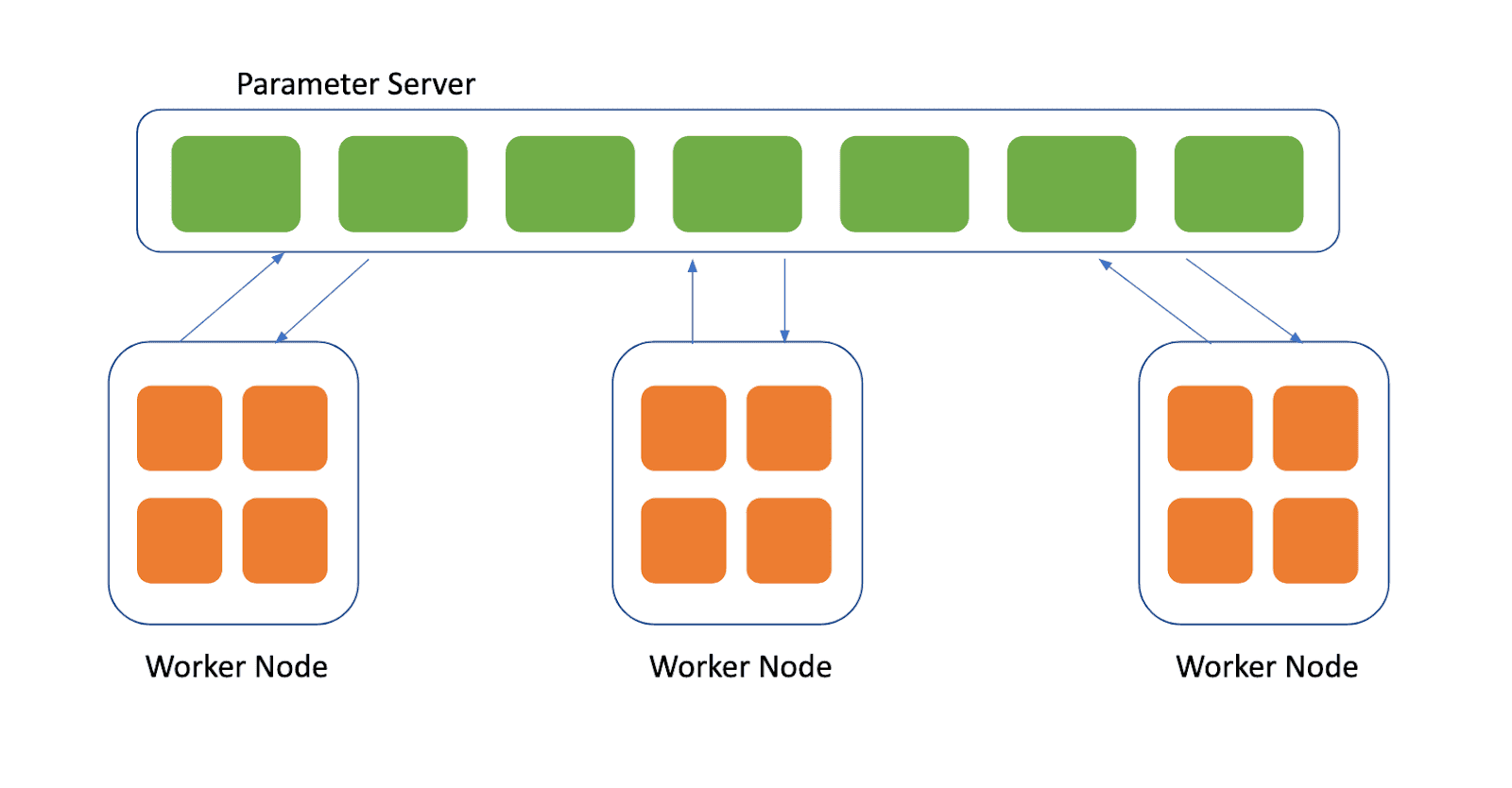

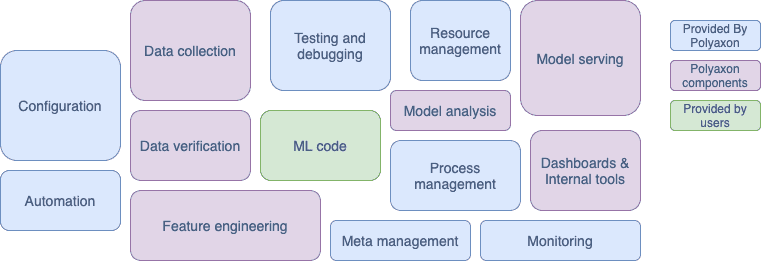

Figure 1. Elements for ML systems. Adapted from Hidden Technical Debt in Machine Learning Systems.


This new release is an attempt to reduce the complexity of the current machine learning lifecycle and drive more productivity for its users.

One of the core use-cases of Polyaxon is fast experimentation. Data scientists prefer Polyaxon to other platforms for its ease of use; however, they are left to decide how to productionize their models. Oftentimes, they will use platforms that were created initially for data pipelines instead of machine learning pipelines.

With this new release, we are trying to simplify the process of going from an experiment to a robust and production-ready pipeline, while keeping the experimentation phase as easy or even easier.

Polyaxonfiles are how users package a machine learning experiment. Although we added several features in this release, they are still very simple to author compared to other systems, where a data scientist or a machine learning practitioner is forced to learn a complex system in order to author a simple experiment (e.g. Kubernetes manifests).

Polyflow

Primitives

In Polyaxon v1, users will only have to deal with 2 primitives: Component and Operation.

A component allows users to package most of the information about what they are trying to schedule on the platform, and an operation is how to run the component.

Example of a component for running an experiment:

    kind: component
    run:
      kind: job
      container:
        image: tf-image
        command: ["python", "-m", "main"]

To run the experiment using the CLI:

    polyaxon run -f my-experiment.yaml

The same component structure can be used to describe how to schedule services, DAGs, parallel jobs and hyper-parameters tuning jobs, distributed TensorFlow jobs, distributed PyTorch jobs, distributed MPI jobs, Ray jobs, and Dask jobs; see the following sections for more details.

Builds are not required anymore

In Polyaxon v0, a build step was required to start an experiment, which forced a specific way of using the platform. Users who wanted to start an experiment without building a new image, or who used their own build system for creating images, were forced to wait for another build scheduled by Polyaxon.

Polyaxon v1 does not build images by default and allows users to provide an image for running their jobs. Users who prefer to create a new image for each experiment can leverage a simple build & run DAG to achieve that:

    kind: component
    name: my-dag
    description: build and run pipeline
    run:
      kind: dag
      operations:
        - name: build
          hub_ref: kaniko
          params:
            destination: "{name}:{run_uuid}"
        - name: experiment
          dependencies: [build]
          path_ref: ./my-experiment
          params:
            image: "{ops.build.outputs.destination}"

You can see that the polyaxonfile is still very simple to use, and users don’t have to learn any concept apart from providing a command and/or a list of args for a container to start a job. Once a user needs to handle a more complex workflow, she can learn how to modify a subsection of the manifest to achieve the desired behaviour.

Of course, a Polyaxonfile can grow in complexity when dealing with a DAG with several operations, which can include distributed training operations, hyper-parameters tuning operations, and complex data processing operations. Polyaxon provides ways to split the logic into separate yaml/python files. Components that reach a level of maturity and are generic enough can be stored in the organization’s registry and referenced by their name:tag, similar to how you would use a docker image. For example, in this pipeline, kaniko is a component that is provided as part of the public registry: Polyaxon hub.

Initializers

Polyaxon v1 comes with a long-awaited feature for initializing containers with data, git repos, and dockerfiles.

Previously, the only way to get code into a user container was to build a new image. In a fast experimentation cycle, building an image is not always necessary and can be an expensive operation. A user might just want to initialize the same docker image with a different version of her code repo:

    kind: component
    name: my-experiment
    description: some optional description
    tags: [tensorflow]
    run:
      kind: job
      container:
        init:
          - {"git": {"url": "https://github.com/user/repo", "revision": "dev"}}
        image: tf-image
        args: ["python", "-m", "main"]

Git initializers can also be used to build docker images with a specific code repo in a similar fashion.

Another piece of feedback that we received, especially from large teams where code is not hosted in a single repo, was the need to use several repos in the same job or to build an image from several repos. With initializers, users can build images or start jobs that require access to more than one repo. Additionally, Polyaxon tracks the code reference of each repo and links them to the run for analytics and provenance reasons.

    kind: component
    name: my-experiment
    description: some optional description
    tags: [build]
    run:
      kind: job
      container:
        init:
          - {"git": {"url": "https://github.com/user/repo1", "revision": "dev"}}
          - {"git": {"url": "https://github.com/user2/repo2", "revision": "7d687ebac9bcf0f8013daa8805924b9ee1cd9b61"}}
        image: my-build-logic

Dockerfile initializers are an easy way to generate dockerfiles. Sometimes users might not have a dockerfile in their repo, so Polyaxon provides a simple dockerfile generator. This generator is similar to the one in the Polyaxon v0 build step, with more features to customize the behaviour, e.g.

    kind: component
    name: my-experiment
    description: some optional description
    tags: [tensorflow]
    run:
      kind: job
      container:
        init:
          - git:
              url: "https://github.com/user/repo1"
              revision: "dev"
          - dockerfile:
              image: "base"
              env: {KEY: val}
              copy: {"/mypathath/": "/code/path"}
        image: polyaxon-dockerize

Artifacts initializers are similar and allow users to download data from the default artifacts store, from a volume, or from a blob storage holding a dataset or some artifacts.

    kind: component
    name: my-experiment
    description: some optional description
    tags: [tensorflow]
    run:
      kind: job
      container:
        init:
          - connection: my-s3-bucket
            artifacts: {"files": ["path1", "path2"], "dirs": ["path3"]}

Containers, init containers, sidecar containers

Polyaxon operation running on Kubernetes


In previous versions of Polyaxon, users sometimes had to change some aspects of the underlying containers running in their clusters, and were asking how they could modify some entries, e.g. dnsConfig. Polyaxon v0 did not expose any fields from the container specification, so users were working around the platform’s limitations to run their jobs as they wanted.

In v1, Polyaxon exposes 100% of the container specification. Most users will not have to use any of those fields, but for advanced use-cases the platform will not limit what users can do. In addition, Polyaxon now supports injecting custom init containers and sidecar containers.

Job

Previously in v0, Polyaxon exposed 3 primitives to run batch jobs (experiment, build, job). In v1, there’s one abstraction, _job_, that allows users to:

- build containers

- download or process data

- query a sql database or generate a dataframe

- train a model

Since components allow users to package logic, several components will be provided for running usual and repetitive tasks, such as building containers using kaniko or downloading an S3 bucket. In case of a limitation, users will not have to wait for another Polyaxon release to fix an issue with a component: it should be easy to fork it and modify it to fit their use-case. It’s also possible to have different tags/versions of the same component if the behaviour is better described that way than by passing a parameter to the component.

Service

Previously users had 2 interfaces for creating tensorboards and jupyter notebooks (with 2 backends).

A service is an improvement on this concept and provides a more generic way to create services and dashboards. Using the _service_ section, users will be able to start not only notebooks and tensorboards, but also their own dashboards based on open source tools.

Since components allow users to package logic, users will not have to learn initially how to create services. Similar to Polyaxon v0, the platform will provide components for starting common tools, such as notebooks, jupyterlabs, tensorboards, …

The idea is to use the amazing ecosystem of open source tools to drive visualization, build dashboards, and create internal tools in less than 2 hours.

Distributed workflows

Polyaxon introduced native distributed training for TensorFlow, PyTorch, MXNet, and Horovod around March 2018, and an integration with Kubeflow operators in March 2019.

In v1, Polyaxon will focus on having a better experience and a native integration with distributed Kubernetes Operators:

- Polyaxon for Kubeflow: TFOperator, PytorchOperator, MPIOperator

- KubeRay

- Dask Jobs.

Running a distributed strategy is much cleaner in Polyaxon v1 and less confusing, e.g. an MPIJob instance:

    version: 1.0
    kind: component
    tags: [mpi, tensorflow]
    run:
      kind: mpi_job
      launcher:
        replicas: 1
        container:
          image: mpioperator/tensorflow-benchmarks:latest
          command: [mpirun, python, run.py]
      worker:
        replicas: 2
        environment:
          nodeSelector:
            polyaxon.com: node_for_worker_tasks
          tolerations:
            - key: "key"
              operator: "Exists"
              effect: "NoSchedule"
        container:
          image: mpioperator/tensorflow-benchmarks:latest
          command: [mpirun, python, run.py]
          resources:
            limits:
              nvidia.com/gpu: 1
    ...

Parallel workflows & Polytune

Polyaxon released its built-in hyper-parameters tuning service around March 2018. Since then, several users have been able to maximize the usage of their infrastructure and reduce the time needed to achieve better results compared to manually training models.

Parallel workflows are an improvement on the previous experiment groups, and allow users to parallelize jobs or an entire pipeline using a parallel strategy.

Parallelism is not only useful for running hyper-parameters tuning; it can also be leveraged to speed up data processing. For example, a user who wants to query data from a table over the last 25 days might run a parallel strategy to start 25 parallel jobs, each querying the data for one day. In that use case, the user only has to create a component with logic for querying data based on a date entry, and since Polyaxon runs containers, there’s no limitation on the language used to create the component.

This is extremely powerful. Not only can the component be written in any language, but its logic is also abstracted from Polyaxon itself and can have tests independent of the platform. Moreover, data scientists or machine learning engineers do not need to learn, for instance, how to query a hive table or a marketing table. A data analyst who has much better knowledge of that domain can contribute that component in less than an hour of work and provide a simple description and documentation for the inputs and outputs. The component can then be used by all data scientists to pull data, drive a visualization or an analysis, or create a model.

Polyaxon also provides a way to set the maximum concurrency. In the 25-day example, the user might set the concurrency to 5 to limit the number of parallel jobs to 5 at a time until all 25 jobs have finished.
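To make the 25-jobs-with-concurrency-5 idea concrete, here is a minimal Python sketch. It is only an analogy and does not use Polyaxon's API: `query_day` is a hypothetical stand-in for the containerized component, and a thread pool plays the role of the scheduler that caps how many jobs run at once.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta


def query_day(day: date) -> str:
    """Hypothetical stand-in for the component that queries one day of data."""
    return f"rows for {day.isoformat()}"


def run_parallel(days, concurrency=5):
    # max_workers caps how many jobs are in flight at the same time.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # map() returns results in the same order as the inputs.
        return list(pool.map(query_day, days))


# One job per day for the last 25 days, counting back from an arbitrary end date.
end = date(2020, 1, 25)
days = [end - timedelta(days=offset) for offset in range(25)]
results = run_parallel(days, concurrency=5)
```

The component logic (`query_day`) knows nothing about how it is parallelized, which mirrors the separation between a component and the strategy that runs it.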

Polyaxon provides several parallel strategies:

- Grid search.

- Random search.

- Hyperband.

- Bayesian optimization.

- NEW Mapping: a user defined list of dictionaries containing the params to pass to a component, also, the dictionary can be constructed from the outputs of other upstream jobs.

- NEW Hyperopt.

- NEW Customizable interface for creating an iterative optimization logic.
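As a rough illustration of how the simplest strategies relate, the following Python sketch expands a search space into one params dictionary per run (grid search) and compares it with an explicit mapping. The parameter names `lr` and `dropout` are made up for the example, and the code illustrates the concept only, not Polyaxon's implementation.

```python
from itertools import product


def grid_search(space):
    """Expand a dict of value lists into one params dict per combination."""
    names = list(space)
    combos = product(*(space[name] for name in names))
    return [dict(zip(names, values)) for values in combos]


# Hypothetical search space; the parameter names are illustrative only.
space = {"lr": [0.01, 0.1], "dropout": [0.2, 0.5]}
grid = grid_search(space)

# A mapping is the explicit form: the user lists the dictionaries directly,
# so only the chosen combinations are run.
mapping = [{"lr": 0.01, "dropout": 0.2}, {"lr": 0.1, "dropout": 0.5}]
```

Each dictionary in `grid` or `mapping` corresponds to the params passed to one run of the component.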

DAGs

The v1 release brings native pipelining to Polyaxon users. A pipeline is a graph of dependencies between components (inline components or components hosted on Polyaxon hub / an organization’s private registry).

Polyaxon DAGs are very simple to build, and are oriented toward machine learning workflows instead of generic workflows.

A DAG in Polyaxon is a normal component that follows the same logic as jobs, services, distributed jobs, or parallel jobs.

Since a DAG is a list of other components with dependencies, Polyaxon DAGs can be naturally nested, they can also include a service, a job, a hyper-parameters tuning job, a distributed job, or any complex combination of them.
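The dependency idea can be sketched in a few lines of Python using the standard library's `graphlib` (Python 3.9+). This is a conceptual illustration, not how Polyaxon schedules operations; the operation names `preprocess` and `report` are hypothetical additions to the build/experiment example.

```python
from graphlib import TopologicalSorter

# Operation name -> names of the upstream operations it depends on.
dag = {
    "build": [],
    "preprocess": [],
    "experiment": ["build", "preprocess"],
    "report": ["experiment"],
}


def execution_waves(dag):
    """Group operations into waves; operations in one wave can run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # mark the wave as finished to unlock downstream ops
    return waves


waves = execution_waves(dag)
```

Here `build` and `preprocess` have no dependencies and land in the first wave, `experiment` waits for both, and `report` runs last.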

Most other pipeline engines do not support these combinations naturally, leaving users with the task of creating and managing that logic. Here are some examples that cannot be managed by a general purpose workflow engine:

- Running Bayesian optimization logic: a step cannot naturally operate that logic, so another system (another layer of abstraction) must be brought in to incorporate it.

- Creating a distributed job: since the system cannot natively operate the job, the step will only operate a watch loop. Stopping or retrying that step is almost impossible to do automatically.

- Starting a Tensorboard if the Tensorflow job started successfully, and stopping that service once the experiment finishes.

The list of examples is very long, and that was one of the reasons to create a simple interface that allows users to run modern ML pipelines without introducing too many leaky abstractions. The fact that Polyaxon supports these combinations in a natural way allows users to create nested DAGs, Map-reduce style pipelines (i.e. the downstream operation expects the outputs of a parallel operation), start an operation as a result of a Ray job, and many other use cases that are not common for generic flow engines.

DAGs in Polyaxon do not have to be synchronized or saved to be run. In Polyaxon they can be used off schedule, and change as often as they need to, before they are registered as a production pipeline.

Early Stopping

Previously Polyaxon had a simple early stopping logic for hyper-parameters tuning based on a metric or a list of metrics. Groups therefore always ended with the status done, regardless of how many jobs failed.

In Polyaxon v1, users have much more power over how to terminate a parallel strategy, hyper-parameters tuning strategy, or a DAG strategy as well as the status to set when the condition is met.

Metric early stopping strategies:

    metric: metric_name
    optimization: maximize or minimize
    policy: ValuePolicy | MedianStoppingPolicy | AverageStoppingPolicy | TruncationStoppingPolicy
    status: status to set when the policy is met

Failure early stopping strategy:

    percent: percent of failed jobs in parallel/dag strategy to stop the parent entity
    status: status to set when the policy is met

Scheduling strategies

Polyaxon provides several strategies for scheduling components. These scheduling strategies are not restricted to pipelines, and can be used for any workflow.

- Interval Schedule.

- Cron Schedule.

- Exact Time Schedule.

- Repeatable Schedule.

Components & Polyaxon hub

We briefly talked about components and operations. For most experimentation situations, users will be changing the polyaxonfiles to create new runs. But in cases where a component might reach a level of maturity and reusability, it could be saved so that other users can use it with very simple documentation.

Examples of these components:

- data processing components based on some inputs (e.g. bucket or path).

- sql queries that can be described and parametrized.

- dashboards for sharing an analysis.

- dashboards for productionizing an ML model as an internal tool.

- an annotation component that expects a list of images or a corpus of text.

The list of examples is long. This is a first step not only toward bringing several employee types to contribute to a data driven culture in the company, but also toward a very effective way to create simple internal tools, using the language of their choice, in less than 2 hours.

This component abstraction, coupled with the RBAC and ACL layer that Polyaxon provides, allows admins to effectively control how users access data, databases, and other connections: Polyaxon checks access to a connection before scheduling the components.

Pod specification, Termination, & cache

Among the most common issues that current users have with Polyaxon v0 are termination, retries, TTLs, and pod restart policy.

Polyaxon v1 brings a huge improvement in this area and provides better tools for setting these parameters. Most of the reconciliation logic has also been improved significantly, both in terms of resource consumption and in terms of robustness.

Similar to the new support for the container specification, Polyaxon v1 has much better support for the pod specification in the environment section.

Finally, users now have much better control over the cache layer and the invalidation logic. Several users were struggling with experiments that require a full rebuild and were complaining about how the cache works. In v0 there was only one way to do that: using the nocache flag in the cli.

In v1, Polyaxon has a much more explicit and customizable cache interface:

    disable: (bool) disable the cache for this component
    ttl: (int) invalidate the cache after a timedelta
    inputs: (list[str]) a list of inputs names to consider for creating the cache state

By default, all runs are cached, unless disable is set to True. Taking again the example of the parallel strategy where the user has to query a table, if one of the 25 jobs fails, rerunning the group will only trigger the job that failed.
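One way to picture a cache state built from a subset of inputs is the Python sketch below. It is an assumption-laden illustration rather than Polyaxon's actual implementation: the hashing scheme, the `cache_key` and `is_fresh` helpers, and the choice of seconds as the ttl unit are all hypothetical.

```python
import hashlib
import json
import time


def cache_key(component, params, inputs=None):
    """Hash the component name plus the selected input values.

    If `inputs` is None, every param contributes to the key.
    """
    names = inputs if inputs is not None else sorted(params)
    selected = {name: params[name] for name in names}
    payload = json.dumps({"component": component, "inputs": selected}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def is_fresh(created_at, ttl, now=None):
    """A cached run is reusable while it is younger than ttl (assumed seconds)."""
    now = time.time() if now is None else now
    return ttl is None or (now - created_at) < ttl


# Only "day" is listed in inputs, so changing "verbose" does not invalidate the cache.
a = cache_key("query-table", {"day": "2020-01-01", "verbose": True}, inputs=["day"])
b = cache_key("query-table", {"day": "2020-01-01", "verbose": False}, inputs=["day"])
c = cache_key("query-table", {"day": "2020-01-02", "verbose": True}, inputs=["day"])
```

Under this scheme, rerunning the 25-day group would find matching keys for the runs that already succeeded and only schedule the day whose run is missing.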

Tracking, visualization, and dashboarding

Polyaxon tracking has been updated and can track more metadata compared to Polyaxon v0. Furthermore, the logic for storing metrics has changed to address the growing size of the database. Several clusters have more than 3 million tracked experiments, several teams using Polyaxon do not have devops experience, and several complained about slower dashboards or query times once they reached that point. We posted 2 recommendations for those users:

- Scale the node holding the database from 1 vcpu to 2 or more, and increase the memory.

- Clusters should have ideally less than 500 000 experiments per project.

In Polyaxon v1, we improved logs and metrics storage:

- Logs were by default stored in a blob storage, but now they have better sharding for long running jobs to increase the responsiveness.

- Metrics (time series) are now stored in a blob storage as well, with an index stored on the database.

This will decrease significantly the size of the database without hurting visualizations or dashboards.

Additionally, we are working on a new tool that allows users to easily drive visualizations programmatically in notebooks, a streamlit dashboard, or other open source tools.

Polyaxon Commercial offering

I am very excited to announce the upcoming Polyaxon Cloud. Previously we offered a PaaS service for private clouds, but it had one big issue: it was hard to operate and required human intervention.

In Polyaxon v1 we are open-sourcing the agent logic, as part of an effort to provide all user interfaces and user-facing logic as open source tools.

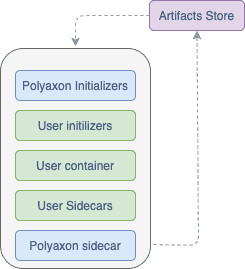

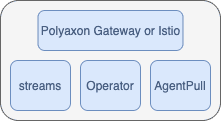

Polyaxon agent runs on the users’ cluster


Polyaxon agents interact with Polyaxon cloud (control plane) to schedule jobs on-prem or any cloud provider without ever needing to have access to the users’ code, data, models, metrics, or logs.

Jobs and services can talk to an agent or to the control plane depending on the nature of information they need to communicate.

Agents and your clusters do not need to receive any connection from the outside world, and Polyaxon can fully function within your protected network.

Polyaxon can manage multiple queues, multiple namespaces, and multiple clusters for teams with full isolation requirements.

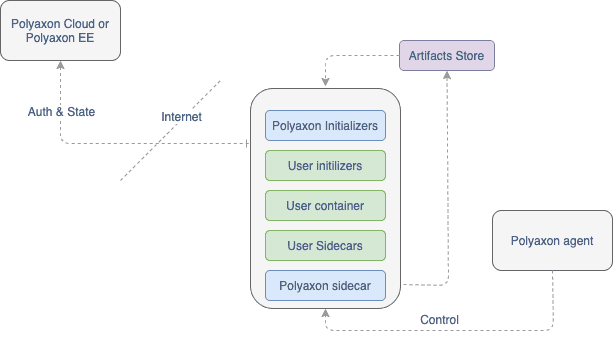

Polyaxon job/service running in hybrid mode


When an agent starts a run (a job or a service), the agent will authenticate the run and grab a scoped token so that the user can interact with the control plane or the agent with specific permissions, and to authorize the run to access the artifact stores and defined connections.

Learn More about Polyaxon

If you are interested in our cloud service, please contact us for early access.

Polyaxon continues to grow quickly and keeps improving and providing the simplest machine learning layer on Kubernetes.

To learn more about all the new features, fixes, and enhancements coming in v1, follow us on