A metadata store is a central repository for storing all data generated in the process of building machine learning models. This includes dataset and artifact versions, model versions, model parameters, model evaluation metrics, CPU and GPU utilization, logs, and stage transitions, among others.

What is a metadata store?

Building a machine learning model involves several steps, such as data pre-processing, model development, and fine-tuning. These steps generate information such as:

- Model parameters.

- Model versions.

- Data set versions.

- Model evaluation metrics.

- Model evaluation charts.

Once model development is complete, you put the model into production. Production models need to be monitored and retrained based on their real-world performance. Managing such a model is challenging if you did not save the information listed above. For example, it is difficult to retrain a model without knowing the data it was trained on, and it is hard to reproduce its results without knowing the parameters used to train it.

Have you ever lost track of earlier experiments while developing an ML project? Have you ever tried to remember results you produced days ago in the same project? Have you ever written down project results by hand, on paper or in Google Docs? Have you ever needed a dataset or an ML model that your colleagues created previously?

This is where a metadata store comes in. It helps you track your experiments and share your datasets and models with other team members in a simple way. First, though, let’s look at ML metadata before diving further into the metadata store.

What is ML metadata?

Machine learning model development is an iterative process that creates metadata at each stage. Some of it is produced by the tools used to develop the model, while engineers create the rest to make collaboration with other team members easier. This metadata also includes:

- Model code.

- Notebooks.

- Dataset samples.

- Prediction samples.

- Model type.

- Model training logs.

- CPU and GPU utilization.

- Where the model was executed, locally or on some cloud infrastructure.

- How long it took to train the model.

- The team member who trained the model.

- Documentation of the model development.

The list above covers some of the data generated during model development, but it is not exhaustive. A metadata store, then, is a central repository for all data generated in the process of building machine learning models.
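As a rough illustration of the items listed above, the sketch below bundles a few of them (model type, who trained the model, where it ran, and how long training took) into a single record. `RunMetadata`, `run_and_record`, and `toy_train` are hypothetical names invented for this example; they are not part of Polyaxon or any other library.

```python
import platform
import time
from dataclasses import asdict, dataclass, field


@dataclass
class RunMetadata:
    """Hypothetical record for one training run (not a Polyaxon type)."""

    model_type: str
    trained_by: str
    params: dict
    metrics: dict = field(default_factory=dict)
    # Where the run executed: the machine's network name (may be empty).
    host: str = field(default_factory=platform.node)
    python_version: str = field(default_factory=platform.python_version)
    duration_seconds: float = 0.0


def run_and_record(meta, train_fn):
    """Call train_fn(params) and store its metrics and wall-clock duration."""
    start = time.perf_counter()
    meta.metrics = train_fn(meta.params)
    meta.duration_seconds = time.perf_counter() - start
    return meta


def toy_train(params):
    # Stand-in for real training; it only echoes a value back as a "metric".
    return {"epochs_run": params["epochs"]}


meta = run_and_record(
    RunMetadata(model_type="toy", trained_by="alice", params={"epochs": 3}),
    toy_train,
)
print(asdict(meta))
```

A record like this is only useful once it is saved somewhere that the rest of the team can reach, which is the role the metadata store plays.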

ML metadata store solutions

A metadata store enables you to store and track meta-information about your machine learning projects and experiments. You can keep this information in a text file or even a spreadsheet. However, a better solution is to use a managed metadata store that allows you to store all the meta-information and collaborate with your teammates.
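To make the plain-file option concrete, here is a minimal sketch that appends one JSON record per run to a text file and reads the records back. The helper names (`append_run`, `load_runs`), the file name, and the record fields are assumptions made for illustration only.

```python
import json
import tempfile
from pathlib import Path


def append_run(store_path, record):
    """Append one run record as a single JSON line."""
    with store_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")


def load_runs(store_path):
    """Read every run record back from the file, skipping blank lines."""
    with store_path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# A temporary directory keeps the example self-contained.
store = Path(tempfile.mkdtemp()) / "runs.jsonl"
append_run(store, {"run": "run-1", "params": {"lr": 0.1}, "metrics": {"accuracy": 0.81}})
append_run(store, {"run": "run-2", "params": {"lr": 0.01}, "metrics": {"accuracy": 0.88}})

runs = load_runs(store)
print(len(runs), "runs recorded")
```

This can work for a single person on a single machine, but a file on one laptop is not easy to share, search, or browse, which is where a managed store becomes the better option.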

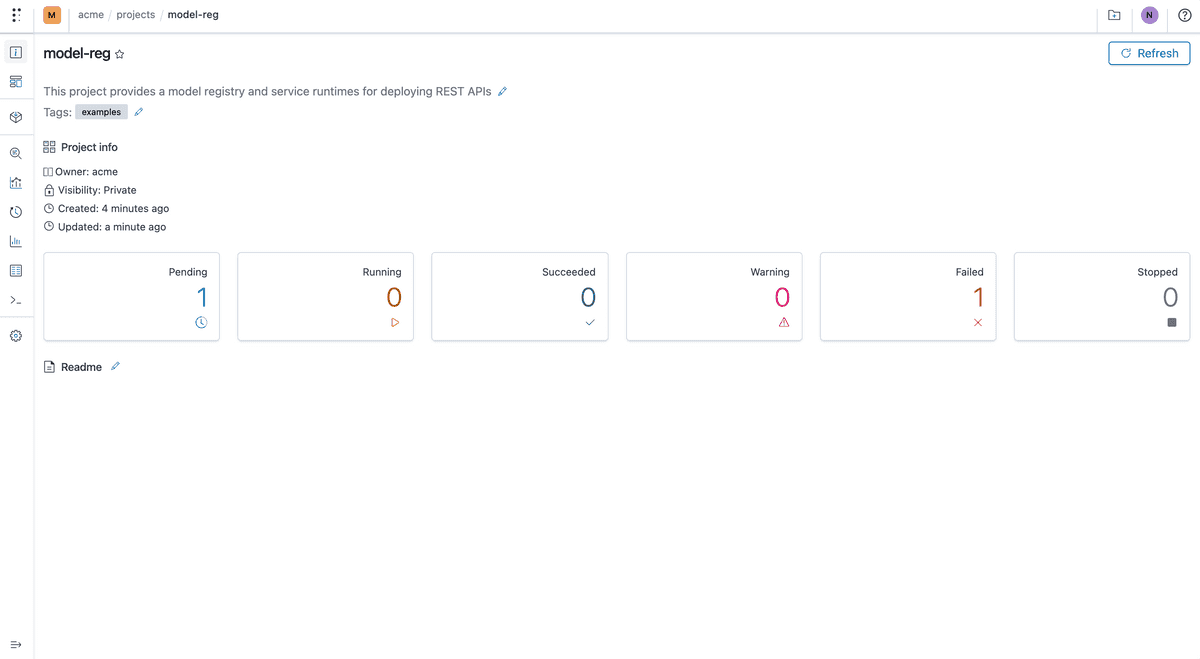

Polyaxon provides an open-source SDK for building, training, and tracking your machine learning metadata, including ML models and datasets with semantic versioning. Polyaxon also provides extensive artifact logging and dynamic reporting with seamless local and cloud integration.

With Polyaxon, you can perform simple experiments on your local machine and quickly move to cloud infrastructure. For example, you can perform simple experiments locally for a computer vision project and quickly move to a remote Kubernetes cluster to use more GPUs. Polyaxon will track and store your ML metadata whether you are working locally or remotely.

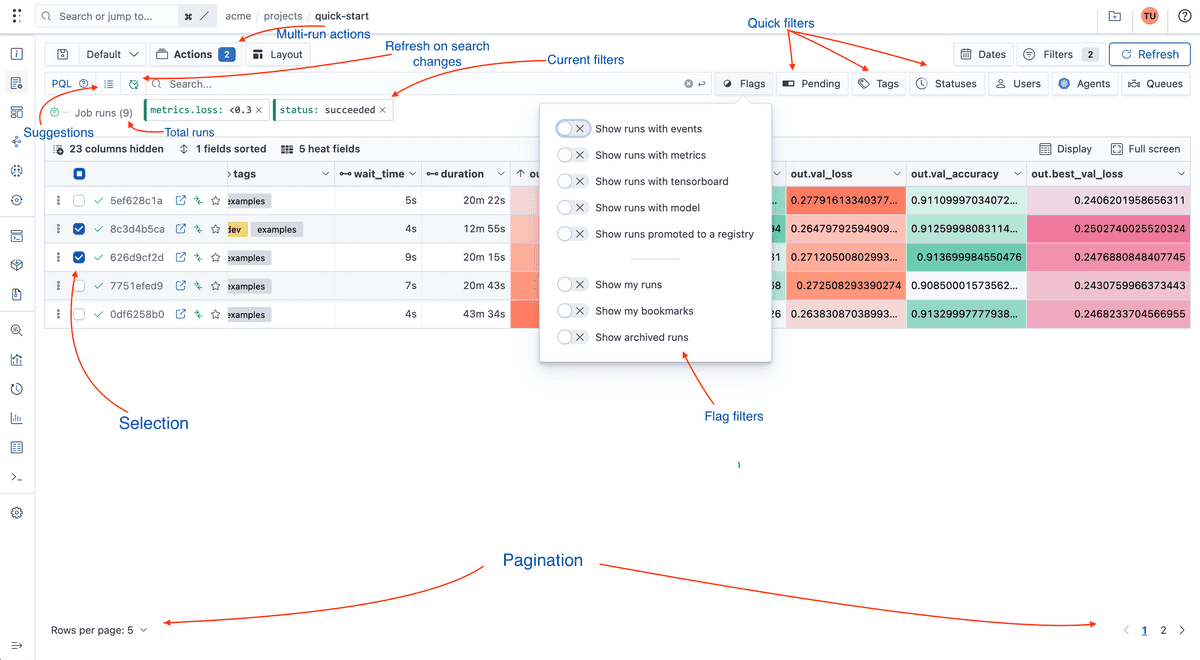

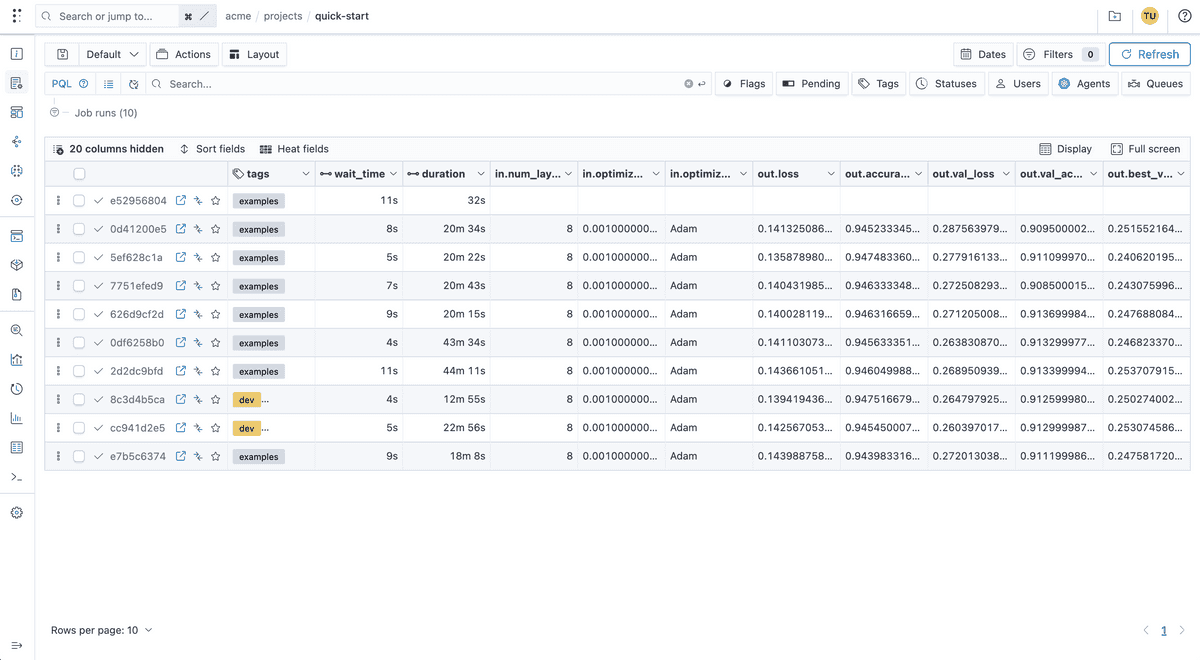

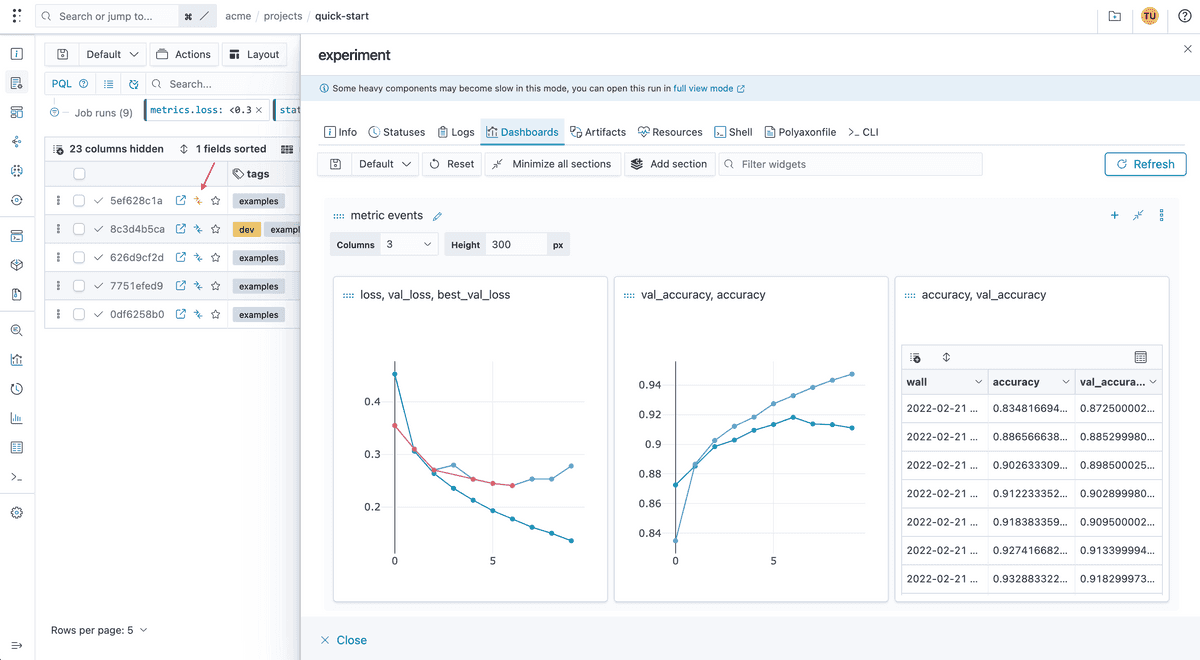

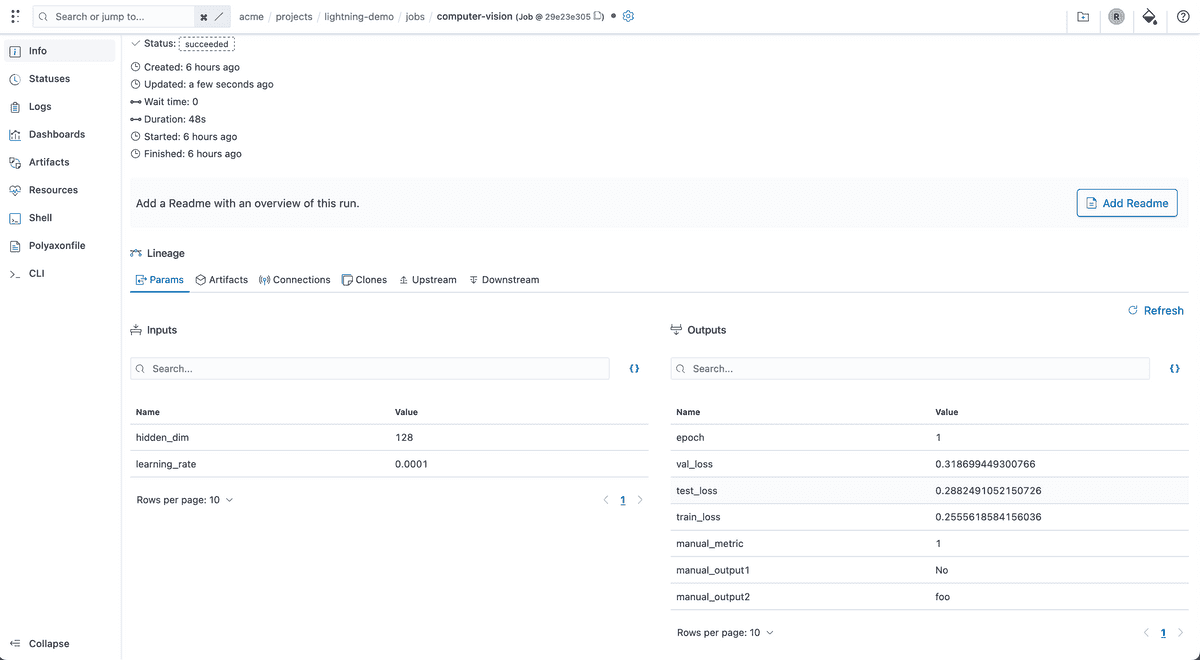

Furthermore, Polyaxon allows you to compare experiments to better understand the model’s performance across runs. You can also fetch model parameters, which enables you to reproduce experiments. In a nutshell, Polyaxon provides a central place to manage all information relating to your machine learning model development process.
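The idea behind comparing runs and fetching parameters can be sketched in a few lines of plain Python. This is not how Polyaxon implements it; the run records, `best_run`, and `param_diff` are hypothetical and exist only to show what becomes possible once parameters and metrics are stored together.

```python
def best_run(runs, metric, higher_is_better=True):
    """Return the run with the best value for `metric`.

    Runs that did not log the metric are ignored. Raises ValueError
    if no run logged it.
    """
    scored = [r for r in runs if metric in r["metrics"]]
    pick = max if higher_is_better else min
    return pick(scored, key=lambda r: r["metrics"][metric])


def param_diff(run_a, run_b):
    """Map each parameter that differs to its (run_a, run_b) values."""
    keys = sorted(set(run_a["params"]) | set(run_b["params"]))
    return {
        k: (run_a["params"].get(k), run_b["params"].get(k))
        for k in keys
        if run_a["params"].get(k) != run_b["params"].get(k)
    }


runs = [
    {"name": "run-1", "params": {"lr": 0.1, "batch_size": 32}, "metrics": {"accuracy": 0.81}},
    {"name": "run-2", "params": {"lr": 0.01, "batch_size": 32}, "metrics": {"accuracy": 0.88}},
    {"name": "run-3", "params": {"lr": 0.01, "batch_size": 64}, "metrics": {}},
]

best = best_run(runs, "accuracy")
print(best["name"], best["params"])   # parameters needed to reproduce the best run
print(param_diff(runs[0], best))      # what changed between run-1 and the best run
```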

Metadata stored by Polyaxon

Polyaxon stores various types of model metadata. Let’s look at some of them.

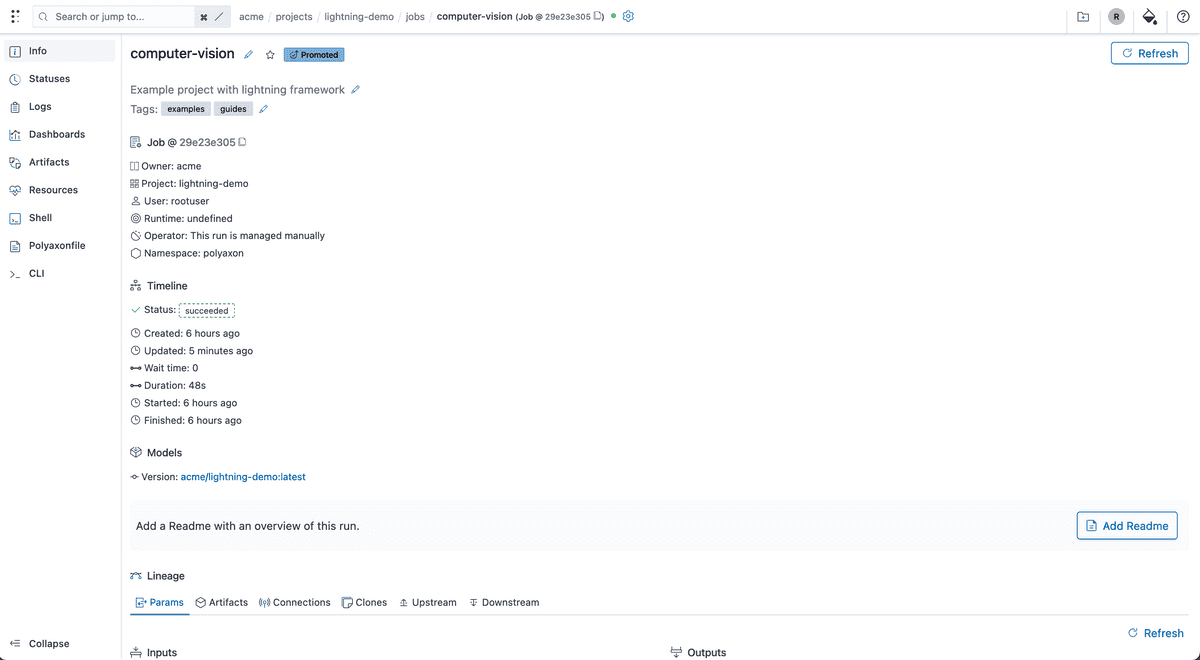

Metadata about experiments and model training runs

Polyaxon allows you to store metadata about experiments and model training runs. This information includes but is not limited to:

- Dataset version used for model training.

- Model hyperparameters yielding the best results.

- Training loss and metrics to understand if the model is learning.

- Testing metrics and loss to quickly see if the model is overfitting.

- Model predictions to get a rough idea of its performance.

- Hardware metrics to inform you of GPU and CPU utilization.

- Performance charts such as accuracy and loss plots, confusion matrix, Precision-Recall Curve, ROC curve, etc.

- Package versions to ensure the project runs without failing.

- Model training logs to make it easy to debug the project.

- Information that is specific to the domain of your problem.
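One item from the list above, package versions, can be captured with the Python standard library alone. The sketch below is a generic illustration and does not use Polyaxon; `package_versions` and `environment_snapshot` are hypothetical helpers, and the package names passed in are only examples.

```python
import platform
from importlib import metadata


def package_versions(names):
    """Return {package: installed version}, or None when not installed."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def environment_snapshot(names):
    """Collect the interpreter version together with the package versions."""
    return {
        "python": platform.python_version(),
        "packages": package_versions(names),
    }


snapshot = environment_snapshot(["numpy", "scikit-learn", "torch"])
print(snapshot)
```

Storing a snapshot like this next to each run makes it possible to rebuild the same environment later.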

Metadata about trained models

Polyaxon stores information regarding the trained models too. This information includes:

- The person who trained the model.

- Model version.

- The infrastructure used to train the model, whether local or cloud.

- Machine learning packages used to train the model, for example, TensorFlow, PyTorch, Scikit-learn, etc.

- Model description.

- When the model was trained.

- How long it took to train the model.

Polyaxon also stores the resulting model and makes it accessible for immediate use. You can fetch the model and use it for predictions right away.

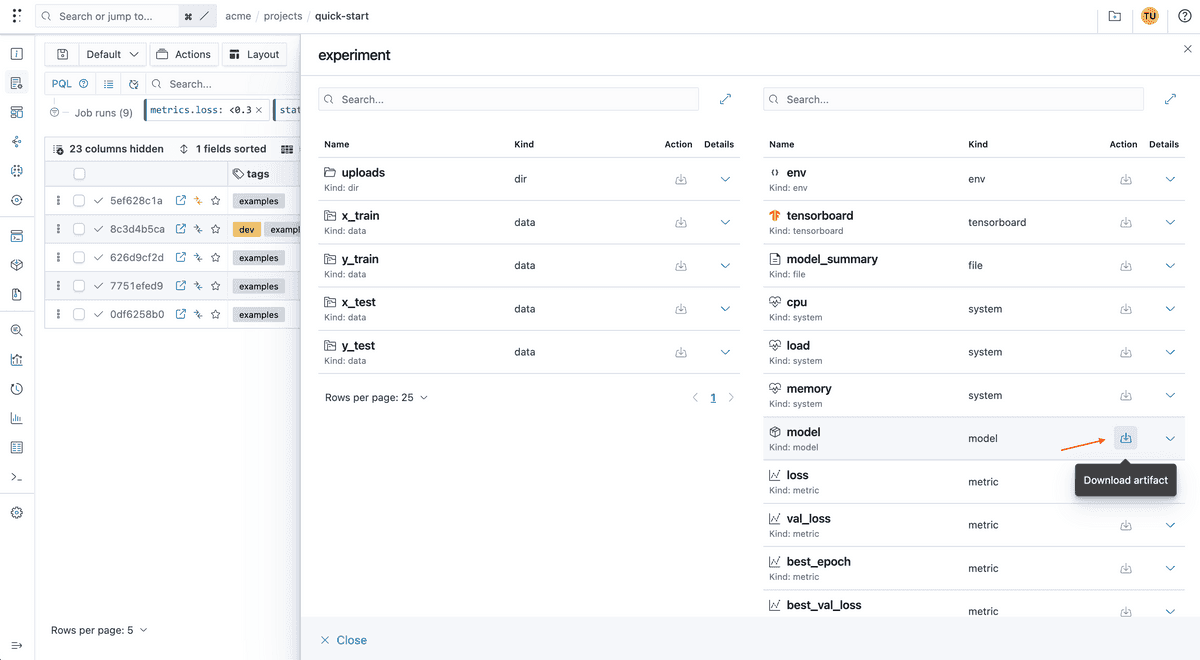

Metadata about datasets

Polyaxon automatically saves various information when you create datasets. You can also save other information types. This dataset metadata includes:

- The person who created the data.

- How long it took to create the dataset.

- The infrastructure used to create the dataset.

- Dataset description.

- Dataset version.

- Dataset summary statistics, such as the number of rows and summary charts.

- The size of the data.

- Logs from the dataset creation run.

- Any other data you would like to log about the data.

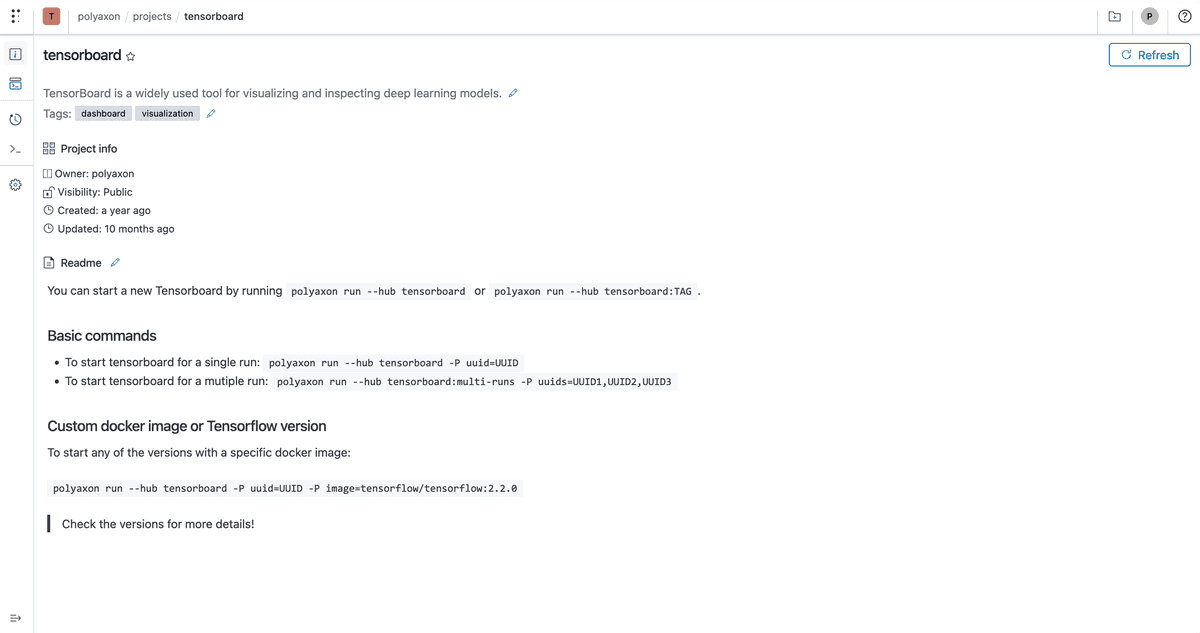
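Several of the dataset items above (version, size, number of rows, summary statistics) can be derived directly from the data. The sketch below does this for a small in-memory CSV using only the standard library. Using a content hash as the version is an assumption made for this example, not a description of how Polyaxon versions datasets, and `describe_dataset` is a hypothetical helper.

```python
import csv
import hashlib
import io
import statistics


def describe_dataset(csv_text, numeric_column):
    """Compute simple metadata for a CSV held in a string."""
    raw = csv_text.encode("utf-8")
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    values = [float(row[numeric_column]) for row in rows]
    return {
        # Assumption: a short content hash stands in for a dataset version.
        "version": hashlib.sha256(raw).hexdigest()[:12],
        "size_bytes": len(raw),
        "num_rows": len(rows),
        "columns": list(rows[0].keys()) if rows else [],
        "mean": statistics.mean(values) if values else None,
        "min": min(values) if values else None,
        "max": max(values) if values else None,
    }


sample = "age,income\n30,100\n40,200\n50,300\n"
info = describe_dataset(sample, "income")
print(info)
```

Because the version is derived from the content, changing a single value in the data produces a different version string, which makes it easy to tell whether two runs used the same dataset.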

Document machine learning projects

Documentation is critical, especially when working in a team. Documenting a project makes it easy for teammates to contribute to a project. It also makes it easy for you to come back to the project in the future. In a company setting, it also makes it easy to onboard new team members to a project.

Polyaxon was built with this in mind. Polyaxon provides project, run, artifact, component, and model pages for documenting all entities. The documentation is provided in a README file.

Polyaxon also allows you to embed dynamic content in the README file. The dynamic content will appear on the project’s page. For instance, you can embed model metrics, parameters, comparisons between different projects, and sample predictions on the page.

Why manage metadata in an ML metadata store?

Storing machine learning metadata from experiments is important for several reasons. It enables you to:

- Compare results from different experiments.

- Work collaboratively within a team of data scientists by making processes more transparent and breaking down silos.

- Reproduce previous experiments.

- Visualize results from different experiments.

- Easily find problems and errors in the model development process.

- Fetch specific dataset versions used for model training.

- Quickly fetch different model versions and use them for prediction.

Final thoughts

A machine learning metadata store is critical for machine learning teams that run a lot of experiments. Apart from storing all metadata about your projects, it makes it easy to collaborate on projects and reproduce results. The Polyaxon metadata store is unique because it enables you to transition from local to cloud development by adding a single line of code to your dataset and model functions.

Visit Polyaxon to start building now! Don’t forget to join our Slack community, and follow us on Twitter and LinkedIn for resources, events, and much more.