Many users reported experiments getting stuck during hyperparameter search. After some investigation, we found that some events were being dropped. This release includes a fix for that issue as well as other improvements and features. Notable additions include three important features: instrumentation for experiments running outside of Polyaxon, customizable charts and visualizations, and better security and scoping of experiment runs.

These new features mean increased flexibility, stability, and security. The release also reflects the increasing maturity of the platform and gives developers the flexibility to run experiments on other platforms while consolidating all results in one dashboard.

Let’s dive into the key changes of this release:

Experiments stuck in the “scheduled” status

Many users reported that, when running hyperparameter tuning, experiments got stuck in the scheduled status. The experiments were, in fact, running and reporting updates, but their statuses were not being updated. This issue only happens in some specific deployments where the resources allocated to the events handler are limited. This release introduces a fix for this issue, and we are still investigating whether other issues could impair the performance and stability of the platform.
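To make the failure mode more concrete, here is a minimal sketch of how dropped events can leave a status stale. It is an illustration only and not Polyaxon's actual events handler: the `deliver` function, the bounded buffer, and the event tuples are all hypothetical, chosen to show what happens when a resource-limited handler cannot accept every status update.

```python
import queue


def deliver(events, capacity):
    """Hypothetical model: push (experiment_id, status) events through a
    bounded buffer, then let the handler apply whatever was buffered.

    Returns the statuses the handler ends up with and the dropped events.
    """
    buffer = queue.Queue(maxsize=capacity)
    dropped = []
    for event in events:
        try:
            buffer.put_nowait(event)
        except queue.Full:
            # The handler has no room left, so this update is lost.
            dropped.append(event)

    statuses = {}
    while not buffer.empty():
        experiment_id, status = buffer.get_nowait()
        statuses[experiment_id] = status
    return statuses, dropped


events = [(1, "scheduled"), (2, "scheduled"), (1, "running"), (2, "running")]

# A constrained handler loses the later "running" updates.
limited_statuses, limited_dropped = deliver(events, capacity=2)

# A handler with enough room sees every update.
full_statuses, full_dropped = deliver(events, capacity=4)
```

In the constrained case both experiments still appear as scheduled even though they emitted a running update, which matches the symptom users described.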

Tracking and instrumentation

We are very pleased to introduce new tracking and instrumentation in Polyaxon. Many teams asked whether they could run experiments on their local machines while waiting for other resources on the cluster to become available. One issue they had was that they could not track and report the progress of those experiments to compare them with other experiments on Polyaxon. Although it was possible to use the REST API or the Python client to report an experiment’s progress, it was not very intuitive and required some engineering to get a working workflow.

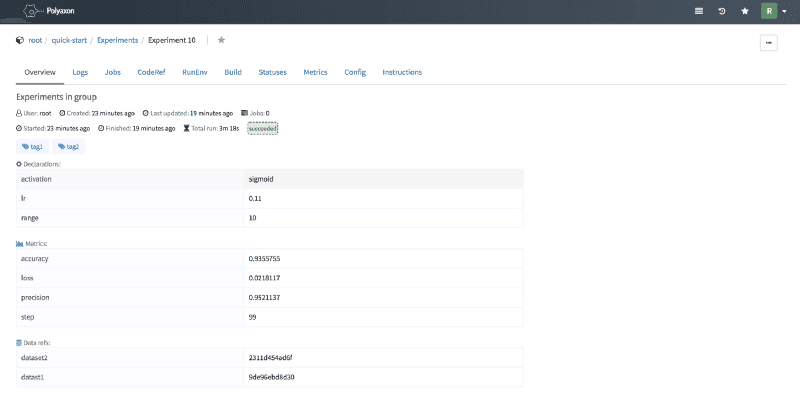

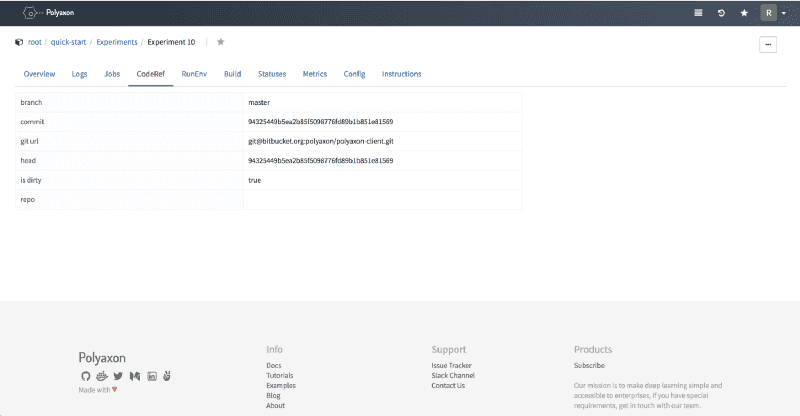

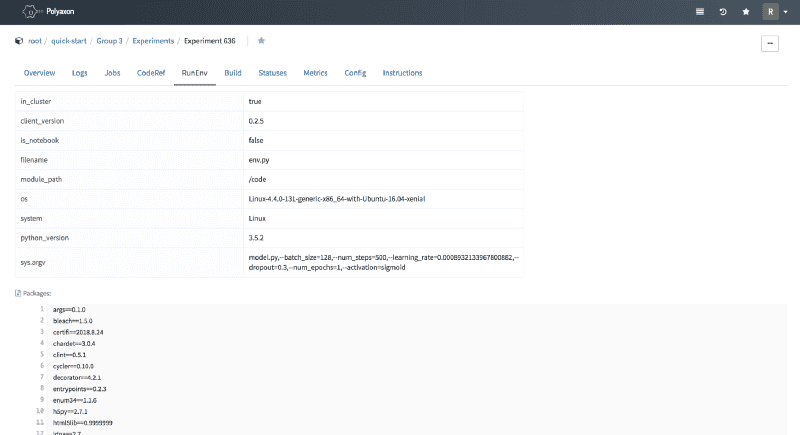

This release introduces a simple workflow for Polyaxon users to report data reference, code reference, run environment, tags, params, and metrics to Polyaxon in a similar way to what they would do in an in-cluster experiment.

Here’s an example of how to track an experiment in-cluster:

from polyaxon_client.tracking import Experiment

experiment = Experiment()

experiment.log_metrics(loss=0.02, accuracy=0.89)

Here’s how to report the same and more information in an experiment running outside of a Polyaxon deployment:

from polyaxon_client.client import PolyaxonClient

from polyaxon_client.tracking import Experiment

client = PolyaxonClient(host=IP_ADDRESS, token=TOKEN)

experiment = Experiment(client=client, project='project1')

experiment.create(description='Experiment outside of Polyaxon')

experiment.log_tags(['tag1', 'tag2'])

experiment.log_params(activation='sigmoid', lr=0.01)

experiment.log_data_ref('dataset1', dataset1)

experiment.log_data_ref('dataset2', dataset2)

...

experiment.log_metrics(step=i, loss=0.02, accuracy=0.89)

The only difference is that the user needs to configure the client:

client = PolyaxonClient(host=IP_ADDRESS, token=TOKEN)

For experiments running in-cluster, this step happens automatically.
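As a rough sketch of how the tracking calls might sit inside a training loop, the example below uses a hypothetical in-memory stand-in instead of the real client so that it runs without a Polyaxon deployment. `InMemoryExperiment` and `train` are assumptions made for illustration; only the `log_params` and `log_metrics` call names are taken from the snippets above, and the loss update is a placeholder rather than real training.

```python
class InMemoryExperiment:
    """Hypothetical stand-in: records calls instead of sending them to Polyaxon."""

    def __init__(self):
        self.params = {}
        self.metrics = []

    def log_params(self, **params):
        self.params.update(params)

    def log_metrics(self, **metrics):
        self.metrics.append(metrics)


def train(experiment, steps=3, lr=0.01):
    """Log params once up front, then one metrics entry per step."""
    experiment.log_params(activation='sigmoid', lr=lr)
    loss = 1.0
    for i in range(steps):
        loss *= 0.5  # placeholder for a real optimisation step
        experiment.log_metrics(step=i, loss=loss)
    return loss


experiment = InMemoryExperiment()
final_loss = train(experiment)
```

Because `train` only depends on the object it is given, the same loop could in principle receive an in-cluster experiment or one created with an explicitly configured client, which is the point of the unified workflow described above.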

Debug mode for experiments and jobs

Often, when an experiment has a bug, users find it very hard to debug the source of the issue because Polyaxon frees the cluster resources as soon as a run reaches a done state. In this release, we introduced an option to keep the Kubernetes resources so that users can debug issues with their runs.

To keep a run for 60 seconds after it fails, for example, you can use the --ttl option:

polyaxon run -f polyaxonfile.yml --ttl=60

Customizable charts

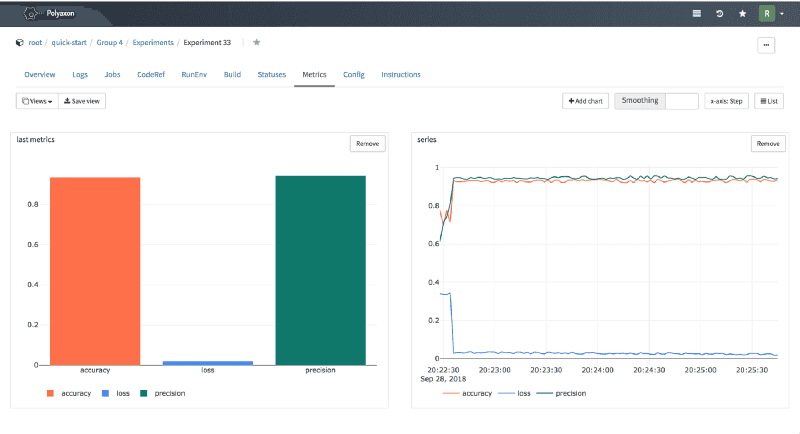

We improved our visualizations, a feature we have wanted to build for a very long time. In the 0.2 release, we announced that the 0.3 release would focus on new and improved visualizations, experiment comparison, and model analysis. This is the first step, and we are very excited about it. The objective is to give users an end-to-end tracking and visualization experience for experiments running on Polyaxon as well as on other platforms.

You can create different types of charts to compare one or more metrics, and you can save different chart views containing different widgets.

Additional Notable Feature Updates

We extended the query spec API to save metrics/params columns for experiment searches.

There was an issue with the cluster API returning nodes that were disabled; we now filter out the disabled nodes.

We extended failure events with a traceback, to give more context when an error happens during scheduling.

We enhanced the tracking experience with data reference and run environment.

We fixed some UI issues noticed in some browsers.

Get Involved

The simplest way to get involved with Polyaxon is to report issues and share feedback.

The platform aims to simplify deep learning and machine learning on Kubernetes, and we welcome contributions to any aspect that aligns with your interests. For example, some users are asking for Java and R clients.

Thank you for your continued feedback and support.