Polyaxon currently supports and simplifies distributed training on the following frameworks: TensorFlow, MXNet, and PyTorch.

To parallelize your computations across processes and clusters of machines, you need to adapt your code and update your polyaxonfile to specify the cluster definition. Polyaxon then takes care of creating the tasks and exporting the environment variables needed for distributed training.

The current specification provides a section called environment to customize the computational resources and the distribution definition.

By default, when no parallelism is specified, Polyaxon creates a single task to run the experiment defined in the polyaxonfile. You may still want to specify the resources for this master task, for example to request GPUs:

    environment:
      resources:
        cpu:
          requests: 1
          limits: 2
        memory:
          requests: 256
          limits: 1024
        gpu:
          requests: 1
          limits: 1

This same section is also used to specify the cluster definition for distributed training. Because each framework performs parallelism differently, this guide describes how to define a cluster for each of the supported frameworks.
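As a quick sanity check on a resources section like the one shown earlier, the sketch below verifies that no request exceeds its limit, which is the rule Kubernetes enforces for container resources. The dictionary mirrors the YAML after parsing. The `check_resources` helper is hypothetical and not part of Polyaxon.

```python
def check_resources(resources):
    """Return a list of problems found in a parsed resources section.

    `resources` maps a resource name (cpu, memory, gpu) to a dict with
    optional `requests` and `limits` keys, mirroring the YAML layout.
    """
    problems = []
    for name, spec in resources.items():
        requests, limits = spec.get("requests"), spec.get("limits")
        # Only compare when both values are present.
        if requests is not None and limits is not None and requests > limits:
            problems.append(f"{name}: requests {requests} exceeds limits {limits}")
    return problems


resources = {
    "cpu": {"requests": 1, "limits": 2},
    "memory": {"requests": 256, "limits": 1024},
    "gpu": {"requests": 1, "limits": 1},
}
print(check_resources(resources))  # prints []
```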

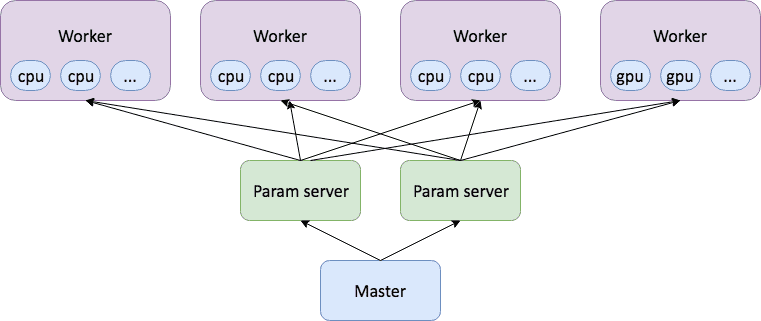

Distributed TensorFlow

To distribute TensorFlow experiments, you need to define a cluster, which is a set of tasks that participate in the distributed execution.

TensorFlow defines 3 types of tasks: master, workers, and parameter servers.

To define a cluster in Polyaxon with a master, 2 parameter servers, and 4 workers, we need to add a tensorflow subsection to the environment section:

    environment:
      ...

      tensorflow:
        n_workers: 4
        n_ps: 2

As mentioned above, Polyaxon always creates a master task, so only the workers and parameter servers are declared here.
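To make the resulting cluster concrete, here is a minimal sketch of the tasks this definition yields, together with a `TF_CONFIG`-style description for one of them. `TF_CONFIG` is the JSON environment variable that TensorFlow's distributed APIs read. The host names, the port, and the two helpers are hypothetical, and it is an assumption that Polyaxon exports the cluster in exactly this form.

```python
import json


def build_cluster(n_workers, n_ps, port=2222):
    """Build a job -> addresses mapping with one master plus workers and ps.

    Host names and the port are placeholders for illustration only.
    """
    return {
        "master": [f"master-0:{port}"],
        "worker": [f"worker-{i}:{port}" for i in range(n_workers)],
        "ps": [f"ps-{i}:{port}" for i in range(n_ps)],
    }


def tf_config_for(cluster, task_type, task_index):
    """Serialize the cluster and one task's identity as a TF_CONFIG-style string."""
    return json.dumps({
        "cluster": cluster,
        "task": {"type": task_type, "index": task_index},
    })


cluster = build_cluster(n_workers=4, n_ps=2)
total_tasks = sum(len(hosts) for hosts in cluster.values())
print(total_tasks)  # 1 master + 4 workers + 2 parameter servers = 7
print(tf_config_for(cluster, "worker", 2))
```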

You can have more control over the created tasks by defining the resources of each task the same way we defined the resources for the master.

Here’s the same example with custom resources:

    environment:
      resources:
        cpu:
          requests: 1
          limits: 2
        memory:
          requests: 256
          limits: 1024
        gpu:
          requests: 1
          limits: 1

      tensorflow:
        n_workers: 4
        n_ps: 2

        default_worker:
          resources:
            cpu:
              requests: 1
              limits: 2
            memory:
              requests: 256
              limits: 1024
            gpu:
              requests: 1
              limits: 1

        worker:
          - index: 2
            resources:
              cpu:
                requests: 1
                limits: 2
              memory:
                requests: 256
                limits: 1024

        default_ps:
          resources:
            cpu:
              requests: 1
              limits: 1
            memory:
              requests: 256
              limits: 256

The master’s resources are defined in the top-level resources section:

    resources:
      cpu:
        requests: 1
        limits: 2
      memory:
        requests: 256
        limits: 1024
      gpu:
        requests: 1
        limits: 1

The third worker (the worker with index 2) has its own resources definition:

    worker:
      - index: 2
        resources:
          cpu:
            requests: 1
            limits: 2
          memory:
            requests: 256
            limits: 1024

All the other workers use the default worker resources definition:

    default_worker:
      resources:
        cpu:
          requests: 1
          limits: 2
        memory:
          requests: 256
          limits: 1024
        gpu:
          requests: 1
          limits: 1

The same logic applies to the parameter servers, using default_ps and ps.
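The lookup rule described here (a per-index entry wins, otherwise the default applies) can be sketched as follows. The dictionary stands for the parsed tensorflow subsection, trimmed to the relevant keys. `resolve_resources` is a hypothetical helper for illustration, not Polyaxon's implementation, and it assumes a per-index entry replaces the default entirely rather than being merged with it.

```python
def resolve_resources(section, kind, index):
    """Return the resources for task `index` of `kind` ("worker" or "ps").

    An entry in `section[kind]` with a matching index takes precedence;
    otherwise the `default_<kind>` resources are used, or None if absent.
    """
    for entry in section.get(kind, []):
        if entry.get("index") == index:
            return entry.get("resources")
    return section.get(f"default_{kind}", {}).get("resources")


tensorflow = {
    "n_workers": 4,
    "n_ps": 2,
    "default_worker": {"resources": {"cpu": {"requests": 1, "limits": 2},
                                     "gpu": {"requests": 1, "limits": 1}}},
    "worker": [{"index": 2, "resources": {"cpu": {"requests": 1, "limits": 2}}}],
    "default_ps": {"resources": {"cpu": {"requests": 1, "limits": 1}}},
}

for i in range(tensorflow["n_workers"]):
    has_gpu = "gpu" in resolve_resources(tensorflow, "worker", i)
    print(f"worker {i}: gpu={has_gpu}")
```

Under these assumptions, worker 2 is the only worker resolved without a GPU, and both parameter servers fall back to the default_ps resources because no ps entries are listed.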

Distributed MXNet

In a similar fashion, MXNet defines 3 types of tasks: scheduler, workers, and parameter servers.

In this case the master task takes the scheduler role, and the rest of the cluster is defined in an mxnet subsection:

    environment:
      ...

      mxnet:
        n_workers: 3
        n_ps: 1

You can customize the resources of each task in the same way as explained in the TensorFlow section.
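For reference, MXNet's distributed key-value store reads each process's role and the cluster size from `DMLC_*` environment variables. The sketch below builds those variables for the cluster defined in this section. The scheduler host and port are placeholders, `mxnet_env` is a hypothetical helper, and it is an assumption that Polyaxon exports exactly these variables for you.

```python
def mxnet_env(role, n_workers, n_ps, scheduler_host="scheduler-0", scheduler_port=9000):
    """Return the DMLC_* variables for one process of an MXNet cluster.

    `role` is one of "scheduler", "worker", or "server" (a parameter server).
    """
    if role not in ("scheduler", "worker", "server"):
        raise ValueError(f"unknown role: {role}")
    return {
        "DMLC_ROLE": role,
        "DMLC_PS_ROOT_URI": scheduler_host,
        "DMLC_PS_ROOT_PORT": str(scheduler_port),
        "DMLC_NUM_WORKER": str(n_workers),
        "DMLC_NUM_SERVER": str(n_ps),
    }


# The master task acts as the scheduler; 3 workers and 1 parameter server follow.
for role in ("scheduler", "worker", "server"):
    print(mxnet_env(role, n_workers=3, n_ps=1))
```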

Distributed PyTorch

Distributed PyTorch is similar, but it only defines a master task (the worker with rank 0) and a set of worker tasks.

To define a PyTorch cluster in Polyaxon with a master and 3 workers, add a pytorch subsection to the environment section:

    environment:
      ...

      pytorch:
        n_workers: 3

You can customize the resources of each task in the same way as explained in the TensorFlow section.
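In PyTorch's `env://` initialization, each process is identified by a `RANK` out of `WORLD_SIZE` processes, with `MASTER_ADDR` and `MASTER_PORT` pointing at rank 0. The sketch below derives those values for a master plus `n_workers` workers. The host name, the port, and the `pytorch_env` helper are hypothetical, and the mapping of Polyaxon tasks to ranks is an assumption based on the description in this section.

```python
def pytorch_env(n_workers, master_host="master-0", master_port=29500):
    """Return one env-variable dict per process: the master (rank 0), then workers."""
    world_size = n_workers + 1  # the master counts as a process
    return [
        {
            "MASTER_ADDR": master_host,
            "MASTER_PORT": str(master_port),
            "WORLD_SIZE": str(world_size),
            "RANK": str(rank),
        }
        for rank in range(world_size)
    ]


envs = pytorch_env(n_workers=3)
print(len(envs))  # 4 processes: 1 master + 3 workers
print(envs[0]["RANK"], envs[-1]["RANK"])  # prints: 0 3
```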

Examples

You can find examples of distributed experiments for these frameworks in our examples GitHub repo.