Overview

Kubernetes (K8s) is open-source orchestration software for deploying, managing, and scaling containerized workloads and services. A container holds the entire runtime environment: a batch job, a service, or an application, together with all of its dependencies, libraries, and configuration files. This makes containers portable and predictable across different computing environments.

Because machine learning workloads are resource-intensive and data-rich environments bring complex dependencies, data science teams are adopting cloud-native, containerized development approaches for their speed, flexibility, and ability to manage containerized environments at any scale. Kubernetes in particular has become the de facto standard for cloud-native application development and orchestration, largely because of its speed and modularity.

Polyaxon is built on top of the Kubernetes ecosystem to provide:

- Containerized workloads and increased automation

- The write-once, run-everywhere concept and the elimination of complex dependencies or incompatibilities in or across different systems

- Shared responsibility for managing deployments, with data scientists owning their operations and pipelines

- An easier continuous deployment story through fully automated rollouts and rollbacks, with fine-grained observability and minimal or no end-user disruption

- Near-instant feedback through continuous code deployment

Kubernetes offers developers the possibility to code and release faster and more predictably. Once the different steps involved in containerizing code and deploying it via Kubernetes are broken down, Kubernetes’s benefits, such as its cloud-agnosticism, zero-downtime deployment, health checks, autoscaling, and tooling, become clearer. Development becomes simpler, and developers maintain flexibility and control.

Containerized development is a fundamentally different way of designing and packaging software that requires the creation of an effective development environment and Kubernetes development tools. While Kubernetes promises greater simplicity and autonomy, developers need the right tools, practices and configuration to be productive and to establish a fast development feedback loop. While Kubernetes may streamline development itself, the development environment becomes somewhat more complex.

This added complexity includes developers sharing responsibility for aspects of the full application life cycle that are typically operational in nature. Moving to Kubernetes introduces automated, replicable deployment processes. These are part of the initial complexity, but they ultimately give development teams the freedom to focus on development and on shipping software, while also giving them a better understanding of, and control over, what is going on with their deployments.

Originally designed and created at Google to help developers ship and scale cloud-native applications, Kubernetes provides container orchestration. The goal of Kubernetes is to help development teams reduce, or at least manage, the complexity of scaling their container infrastructure.

Core concepts

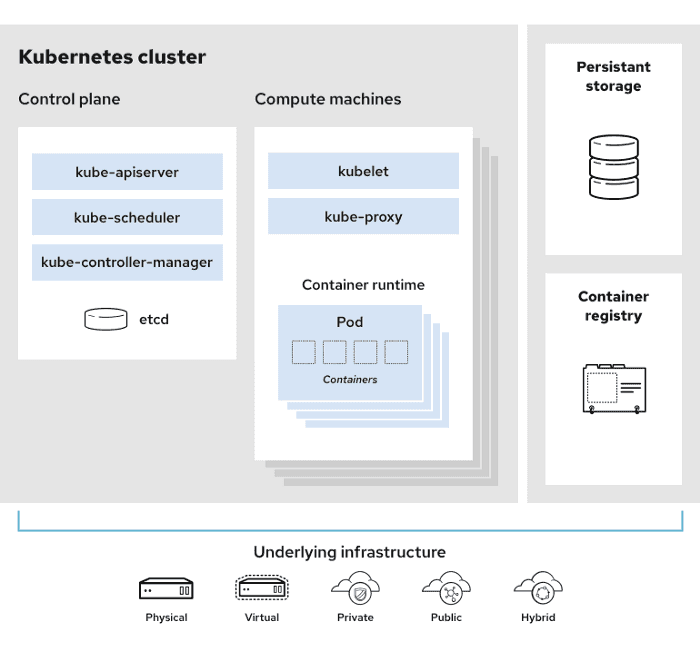

To get started with Kubernetes, there is some basic terminology that will probably already be familiar to developers, such as clusters, nodes, and pods.

- A cluster is a set of nodes for running containerized workloads.

- Nodes are VMs or physical servers where Kubernetes runs containers. There are two types:

  - Master nodes host the control plane functions and services. This is where the desired state of the cluster is maintained, by managing the scheduling of pods across the worker nodes.

  - Worker nodes are where the workloads actually run.

- Orchestration: The master nodes host the control plane, which consists of the following:

  - API Server: The interface that users and other tools interact with.

  - Scheduler: Decides on which nodes pods are placed.

  - Store: Where the cluster’s state and data are stored (typically etcd).

- Worker nodes: Where the workloads run. Each node has:

  - Kubelet: An agent that makes sure that containers are running in a pod. The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy.

  - Container runtime: The software responsible for running containers.

- Workloads: Kubernetes provides several built-in workload resources. These are the most relevant for machine learning workloads:

  - Deployments (and ReplicaSets) define how pods should be deployed. Any pod in a Deployment is interchangeable and can be replaced if needed.

  - Jobs (and CronJobs) define tasks that run to completion and then stop.

  - Services define the abstraction used to expose a pod or a Deployment (multiple pods) as a network service.

  - Pods are the smallest, most basic unit of deployment in Kubernetes.

  - Containers are the common requirement for all workloads. To run any workload in Kubernetes, it first needs to be packaged as a container. These containers become pods or parts of pods: a pod can consist of one or more containers. Because the pod is the smallest unit Kubernetes can run, when a pod is deployed or destroyed, all the containers inside it are started or killed at the same time.
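To make the relationship between Jobs, pods, and containers more concrete, here is a minimal sketch that builds a Job manifest as a plain Python dictionary and prints it as JSON. The helper `make_job_manifest`, the job name `train-example`, and the `python:3.11-slim` image are illustrative assumptions, not something prescribed by Polyaxon or Kubernetes.

```python
import json


def make_job_manifest(name, image, command):
    """Build a Kubernetes Job manifest as a plain dictionary (hypothetical helper)."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Do not retry the pod if the task fails.
            "backoffLimit": 0,
            # Pod template: the Job creates its pods from this description.
            "template": {
                "spec": {
                    # A pod can hold one or more containers; this one has a single container.
                    "containers": [
                        {"name": "main", "image": image, "command": command}
                    ],
                    # A Job's pod template must use "Never" or "OnFailure".
                    "restartPolicy": "Never",
                }
            },
        },
    }


# Assumed example values: a public Python image running a one-line "task".
manifest = make_job_manifest(
    name="train-example",
    image="python:3.11-slim",
    command=["python", "-c", "print('training...')"],
)
print(json.dumps(manifest, indent=2))
```

Since `kubectl` accepts JSON as well as YAML, the printed output could in principle be saved to a file and submitted with `kubectl apply -f`. The task runs to completion and then stops, which is the behavior that distinguishes a Job from a Deployment.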

Benefits of Kubernetes for Machine Learning teams

Kubernetes manages workloads across multiple servers and provides interfaces for working with its cluster architecture. Workloads are distributed to the worker nodes while being managed by the control plane.
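As a rough illustration of how workloads end up distributed across nodes, the following toy sketch assigns each pod to the first node with enough free CPU and GPU. It is a deliberately simplified first-fit model and not the algorithm used by the real Kubernetes scheduler. The `Node`, `PodRequest`, and `schedule` names and all node and pod values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_cpu: float  # CPU cores still available on the node
    free_gpu: int    # GPUs still available on the node


@dataclass
class PodRequest:
    name: str
    cpu: float
    gpu: int = 0


def schedule(pods: List[PodRequest], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Assign each pod to the first node that has enough free CPU and GPU."""
    placement: Dict[str, Optional[str]] = {}
    for pod in pods:
        placement[pod.name] = None  # stays None if no node fits (the pod is "pending")
        for node in nodes:
            if node.free_cpu >= pod.cpu and node.free_gpu >= pod.gpu:
                # Reserve the resources on the chosen node.
                node.free_cpu -= pod.cpu
                node.free_gpu -= pod.gpu
                placement[pod.name] = node.name
                break
    return placement


nodes = [
    Node("cpu-node", free_cpu=4, free_gpu=0),
    Node("gpu-node", free_cpu=8, free_gpu=1),
]
pods = [
    PodRequest("preprocess", cpu=2),
    PodRequest("train", cpu=4, gpu=1),
    PodRequest("train-2", cpu=4, gpu=1),
]
placement = schedule(pods, nodes)
print(placement)
# {'preprocess': 'cpu-node', 'train': 'gpu-node', 'train-2': None}
```

The second training pod stays unassigned because the only GPU is already reserved. In a real cluster such a pod would wait in a pending state until capacity becomes available or more nodes are added.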

For data science and machine learning teams, the benefit of using Kubernetes as an interface is scalable, serverless access to GPUs and CPUs, along with infrastructure abstraction. Data scientists and machine learning engineers can leverage the container abstraction to make their experiments’ execution and runtime portable and reproducible, while accessing scalable and cost-effective compute infrastructure.

Once users learn the basics of packaging an application or a batch job into a container, they can submit and schedule the workload. Kubernetes then manages all the underlying infrastructure considerations.
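As a sketch of what submitting such a workload can look like, the snippet below describes a training pod that declares the CPU, memory, and GPU it needs, leaving placement to Kubernetes. The helper `training_pod`, the image `registry.example.com/team/train:latest`, and the resource values are hypothetical. The `nvidia.com/gpu` resource name assumes that the NVIDIA device plugin is installed in the cluster.

```python
import json


def training_pod(name, image, command, cpu="4", memory="16Gi", gpus=1):
    """Describe a pod with explicit resource limits (hypothetical helper)."""
    limits = {"cpu": cpu, "memory": memory}
    if gpus:
        # Extended resource exposed by the NVIDIA device plugin (assumed to be installed).
        limits["nvidia.com/gpu"] = gpus
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Run the training once; do not restart the container when it exits.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "train",
                    "image": image,
                    "command": command,
                    "resources": {"limits": limits},
                }
            ],
        },
    }


# Hypothetical image and command, for illustration only.
gpu_pod = training_pod(
    name="train-gpu",
    image="registry.example.com/team/train:latest",
    command=["python", "train.py"],
)
cpu_pod = training_pod(
    name="train-cpu",
    image="registry.example.com/team/train:latest",
    command=["python", "train.py"],
    gpus=0,
)
print(json.dumps(gpu_pod, indent=2))
```

The user only states what the workload needs. Which node actually provides the GPU is decided by the scheduler, which is what makes the same description portable across clusters.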

The serverless aspect does not mean the absence of servers. It means that servers are abstracted and managed through Kubernetes’s orchestration layer. Even with all the advantages Kubernetes brings, users still need to manage the underlying servers, or at least actively think about how to expose their nodes. Even in a managed Kubernetes cluster, node management and organization are not completely eliminated from the picture: the team still has to provision and manage worker nodes for its various data science and machine learning projects.

Serverless Machine Learning Workloads in Kubernetes

With Polyaxon, users do not have to actively think about the cluster, nodes, how to move code from git repos or local folders, or how to initialize secrets and data. Polyaxon builds on top of Kubernetes and provides the interfaces needed to offer a much simpler experience.

Although deployment and management are carried out by Polyaxon through direct communication with the Kubernetes API, users are only exposed to a much simpler interface.