Overview

Kubernetes promises to schedule and ship machine learning workloads faster. However, there are a few things data-science and machine learning teams need to know to get from code to a container, and from there to a batch job or a service running on Kubernetes.

Process

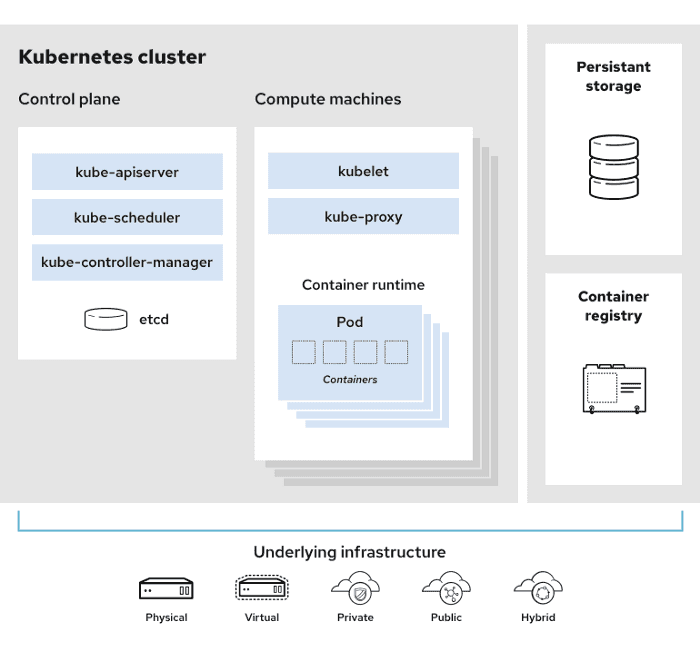

- Packaging code in containers: Kubernetes itself does not run code directly; it is the orchestration engine. Before reaching this stage, code first needs to be packaged in a container, and Docker is one common container format.

- Pushing the container to a registry: The Kubernetes cluster pulls the container image from the registry in order to deploy it.

- Describing the job or service: For each container, Kubernetes reads a manifest that describes how the container should be run. These manifests are written in YAML and encode configuration details, such as the number of instances of the container or how much memory should be allocated to it.

- Deploying containers in Kubernetes: A declarative setup contains all the relevant information about the desired state of the workload, which Kubernetes will then run and maintain. Kubernetes reads the manifest and runs the containers based on the specification.
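
To make the manifest step concrete, here is a minimal sketch in Python that builds a Kubernetes `batch/v1` Job manifest as a plain dictionary and serializes it. The helper `build_job_manifest`, the job name, the image reference `registry.example.com/team/train:0.1.0`, and the `train.py` command are hypothetical placeholders, not something defined by this guide. The sketch emits JSON rather than YAML only to stay within the standard library; Kubernetes accepts manifests in either format.

```python
import json


def build_job_manifest(name, image, command, memory="1Gi", parallelism=1):
    """Return a Kubernetes batch/v1 Job manifest as a plain dict.

    Hypothetical helper for illustration; all argument values are
    supplied by the caller.
    """
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # How many pods of this job may run at the same time.
            "parallelism": parallelism,
            "template": {
                "spec": {
                    # Do not restart the container once it exits.
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": name,
                            # Image that was previously built and pushed.
                            "image": image,
                            "command": command,
                            # Upper bound on memory for the container.
                            "resources": {"limits": {"memory": memory}},
                        }
                    ],
                }
            },
        },
    }


manifest = build_job_manifest(
    name="train-model",
    image="registry.example.com/team/train:0.1.0",  # hypothetical image
    command=["python", "train.py"],  # hypothetical entry point
    memory="2Gi",
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Assuming the image has already been built and pushed (for example with `docker build` and `docker push`), the printed manifest could be saved to a file and submitted with `kubectl apply -f <file>`.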

Automation layer with Polyaxon

Getting a service running in Kubernetes involves a number of steps, which might not be a big deal if these steps were infrequent. But data-science and machine learning teams repeat this process all the time, so it makes sense to abstract and automate it. This is where the value of Polyaxon becomes clear: Polyaxon can schedule pre-built containers, build containers on the fly, and inject additional code, data, and artifacts at runtime while tracking the complete dependency chain. Additionally, Polyaxon comes with a few extra features that provide fan-in and fan-out, hyperparameter tuning, graph and pipeline support, and running operations on a schedule.
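
As a rough illustration of what fan-out means for hyperparameter tuning, the sketch below expands a small search space into one run description per parameter combination. It is plain Python and does not use or represent Polyaxon's API; `expand_grid`, the parameter names, and the `train-<i>` run names are hypothetical and chosen only for this example.

```python
from itertools import product


def expand_grid(search_space):
    """Yield one dict of parameters per point in the Cartesian grid."""
    names = sorted(search_space)  # fixed order so runs are reproducible
    for values in product(*(search_space[n] for n in names)):
        yield dict(zip(names, values))


# Hypothetical search space: 2 learning rates x 3 batch sizes = 6 runs.
search_space = {"learning_rate": [0.01, 0.1], "batch_size": [32, 64, 128]}

# Fan-out: one run description per combination, each with its own arguments.
runs = [
    {"name": f"train-{i}", "args": [f"--{k}={v}" for k, v in params.items()]}
    for i, params in enumerate(expand_grid(search_space))
]

for run in runs:
    print(run["name"], " ".join(run["args"]))
```

Each entry could then be turned into its own container run; collecting the results of all runs to pick the best one would be the corresponding fan-in step.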