Overview

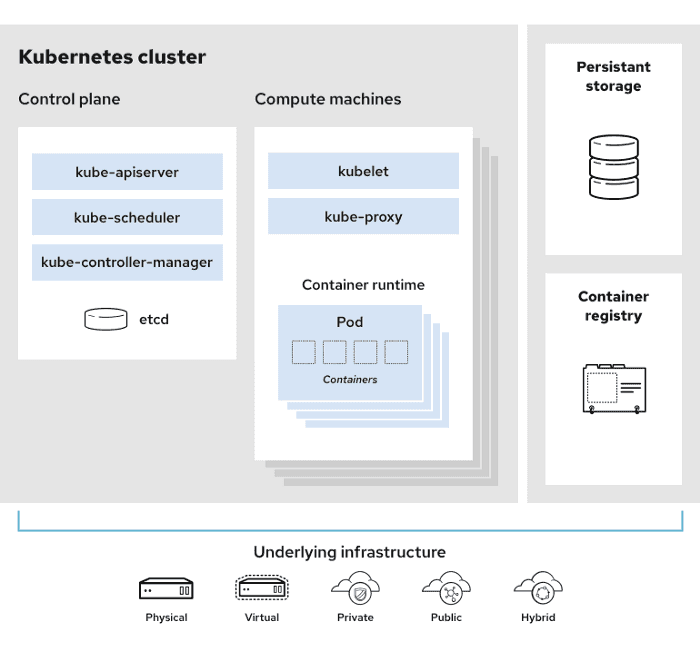

Using Kubernetes to manage data-science projects has vastly simplified container management and deployment, as well as the adoption of serverless machine learning workloads. Yet it has also introduced a new set of problems and added complexity related to cluster management. We therefore need to understand the underlying architecture and the common issues in order to speed up the Kubernetes troubleshooting process.

In this article we will look at:

- Why is Kubernetes troubleshooting difficult

- Common issues

- Common errors

- Interfaces exposed by Kubernetes to debug issues

- How Polyaxon simplifies debugging and monitoring

Why is Kubernetes troubleshooting difficult

Kubernetes is a large and complex system, which makes troubleshooting issues that occur in the cluster very complicated, even in a small or local Kubernetes cluster. Additionally, Kubernetes is often used to build microservices applications, schedule containerized machine learning workloads, or deploy versatile applications, in which each container is developed by a data scientist, a machine learning engineer, or a separate team. This creates a lack of clarity about the division of responsibility: if there is a problem with a pod, is that a DevOps problem, or something to be resolved by the relevant dev, ML, or application team?

The difficulty of diagnosing, identifying, and resolving issues comes from the fact that a problem can be the result of:

- Low level of visibility and a large number of moving parts

- A new configuration made by the Ops team

- An upgrade with backwards-incompatible changes

- An individual container made by a developer

- An issue in one or more pods

- A controller

- A control plane component

- Or a combination of more than one of these

In short, Kubernetes troubleshooting can quickly become a mess, waste major resources, and impact users and application functionality, unless teams closely coordinate and have the right tools available.

Common Kubernetes Configuration Issues

While standing up, upgrading, or provisioning a Kubernetes cluster, you will encounter several issues. Some of these issues are very hard to solve because they stem from an architectural decision, for example the choice of a file system or blob storage technology, or from an incompatibility with the underlying networking solution.

In this section, we will try to categorize the different issue types and narrow down the troubleshooting scope for data-science and machine learning teams.

Network Connectivity Issues

Polyaxon deploys with a gateway that handles the traffic to and from the API. The gateway can be configured with a load balancer or an ingress. Whether the API is reachable is easy to verify; when it is not, the issue can be related to a misconfiguration of the external networking resource, an error in the ingress definition, or a missing proxy configuration when a firewall is in place.

Furthermore, Polyaxon relies on the cluster's internal networking capabilities to expose services and talk to the Kubernetes API. Several issues related to the DNS configuration can prevent Polyaxon from functioning correctly.
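One quick way to look for DNS problems is to try resolving a service name from inside a pod. The following is a minimal sketch that uses only the Python standard library. The helper name `can_resolve` is an illustration, not part of Polyaxon or Kubernetes.

```python
import socket


def can_resolve(hostname: str) -> bool:
    """Return True if the local resolver can turn `hostname` into an address."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        # Raised when the name cannot be resolved, e.g. because cluster DNS is broken.
        return False
```

Run from a shell inside a pod, `can_resolve("kubernetes.default.svc")` checks the standard in-cluster name of the Kubernetes API service. A `False` result there suggests the problem lies with cluster DNS rather than with the workload itself.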

Pod Configuration Issues

One of the most common issues faced by Kubernetes admins is Pod configuration issues. These issues can range from invalid manifests to the use of a deprecated API. However, they are also the simplest to diagnose, as Kubernetes provides clear error messages indicating the root cause of an issue.

Polyaxon helps prevent this type of error because it exposes a cleaner, typed interface and either produces valid Kubernetes manifests or raises errors early, with a faster feedback loop.

Furthermore, Polyaxon provides commands and UI features to easily track down this type of issue using:

- Polyaxon UI: by looking at the status page or by using the inspection feature.

- Polyaxon CLI: `polyaxon ops statuses` and `polyaxon ops inspect`

Node Related Issues

These issues occur when the worker nodes are experiencing issues related to:

- Network

- Hardware failures

- Data loss

- Disk space capacity

- Failures in provisioning

These node-related issues generally require someone with admin access to the cluster.

Common Kubernetes Errors

If you are using Kubernetes, you will inevitably encounter the following errors:

- CreateContainerConfigError

- ImagePullBackOff or ErrImagePull

- CrashLoopBackOff

- Kubernetes Node Not Ready

- OOMKilled

In this section we will provide a guide to identify and resolve these problems.

CreateContainerConfigError

This error is usually the result of a missing Secret or ConfigMap.

- Secrets: Kubernetes objects used to store sensitive data such as a password, a token, a key, or database credentials.

- ConfigMaps: Kubernetes objects that store non-confidential data in key-value pairs, typically used to hold configuration information.

This issue can happen if you mount a Secret or a ConfigMap manually and the underlying object does not exist on the Kubernetes cluster or in the specific namespace.

You can easily identify this error by using:

- Polyaxon UI: by looking at the status page or by using the inspection feature.

- Polyaxon CLI: `polyaxon ops statuses` and `polyaxon ops inspect`

Alternatively, if you have access to the Kubernetes cluster and prefer to check the issue using kubectl, you can run `kubectl get pods` and then describe the pod using `kubectl describe pod [name]`.

To check whether the Secret or ConfigMap exists, you can use:

`kubectl get configmap [name]`

Or

`kubectl get secret [name]`

If the command returns nothing or reports that the resource was not found, the Secret or ConfigMap is missing, and you need to create it. Please check the Kubernetes documentation to learn how to create Secrets or ConfigMaps.
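Before checking objects one by one, it can help to list every Secret and ConfigMap a pod refers to. The sketch below assumes you have loaded the pod manifest as a dictionary, for example from the output of `kubectl get pod [name] -o json`. The function name `referenced_config_objects` is hypothetical, and projected volumes are deliberately not handled.

```python
def referenced_config_objects(pod: dict) -> dict:
    """Collect the Secret and ConfigMap names that a pod manifest refers to."""
    secrets, configmaps = set(), set()
    spec = pod.get("spec", {})

    # Volumes backed directly by a Secret or a ConfigMap.
    for volume in spec.get("volumes", []):
        if "secret" in volume:
            secrets.add(volume["secret"]["secretName"])
        if "configMap" in volume:
            configmaps.add(volume["configMap"]["name"])

    for container in spec.get("initContainers", []) + spec.get("containers", []):
        # Whole objects imported as environment variables.
        for source in container.get("envFrom", []):
            if "secretRef" in source:
                secrets.add(source["secretRef"]["name"])
            if "configMapRef" in source:
                configmaps.add(source["configMapRef"]["name"])
        # Single keys imported as environment variables.
        for env in container.get("env", []):
            value_from = env.get("valueFrom", {})
            if "secretKeyRef" in value_from:
                secrets.add(value_from["secretKeyRef"]["name"])
            if "configMapKeyRef" in value_from:
                configmaps.add(value_from["configMapKeyRef"]["name"])

    return {"secrets": sorted(secrets), "configmaps": sorted(configmaps)}


# Made-up manifest used only to illustrate the function.
example_pod = {
    "spec": {
        "volumes": [{"name": "creds", "secret": {"secretName": "db-credentials"}}],
        "containers": [
            {
                "name": "main",
                "envFrom": [{"configMapRef": {"name": "app-config"}}],
                "env": [
                    {
                        "name": "API_TOKEN",
                        "valueFrom": {"secretKeyRef": {"name": "api-token", "key": "token"}},
                    }
                ],
            }
        ],
    }
}
print(referenced_config_objects(example_pod))
```

Each returned name can then be checked with the `kubectl get secret` or `kubectl get configmap` commands, in the same namespace as the pod.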

ImagePullBackOff or ErrImagePull

This error happens when the pod fails to pull a container image from a registry. This issue generates a warning status in Polyaxon, because the pod does not stop; it simply cannot start because one or more containers defined in its manifest cannot be created.

You can easily identify this error by using:

- Polyaxon UI: by looking at the status page or by using the inspection feature.

This error can happen for two reasons:

- The registry is not accessible

- The image is not stored on the registry, i.e. wrong image name or image tag
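If you have kubectl access, the same information is available in the pod status. The following sketch assumes the pod was loaded as a dictionary from `kubectl get pod [name] -o json` and reports the containers stuck on an image pull. The function name and the sample data are illustrative only.

```python
IMAGE_PULL_REASONS = {"ImagePullBackOff", "ErrImagePull"}


def image_pull_failures(pod: dict) -> list:
    """Return (container, image, reason, message) for each failed image pull."""
    status = pod.get("status", {})
    statuses = status.get("initContainerStatuses", []) + status.get("containerStatuses", [])
    failures = []
    for container in statuses:
        # A container that cannot start reports a "waiting" state with a reason.
        waiting = container.get("state", {}).get("waiting")
        if waiting and waiting.get("reason") in IMAGE_PULL_REASONS:
            failures.append(
                (
                    container["name"],
                    container.get("image"),
                    waiting["reason"],
                    waiting.get("message", ""),
                )
            )
    return failures


# Made-up status used only to illustrate the function.
example_pod = {
    "status": {
        "containerStatuses": [
            {
                "name": "main",
                "image": "registry.example.com/team/model:v2",
                "state": {"waiting": {"reason": "ImagePullBackOff", "message": "Back-off pulling image"}},
            }
        ]
    }
}
for name, image, reason, message in image_pull_failures(example_pod):
    print(f"{name}: {reason} while pulling {image} ({message})")
```

The image reference in the result is the first thing to compare against the registry: a wrong name or tag points to the second cause above, while a correct reference points to registry access.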

CrashLoopBackOff

This issue indicates that a pod cannot run correctly: the pod starts, crashes, starts again, and then crashes again.

This generally happens because:

- The logic running inside the container is wrong, and the best way to get more information is to check the logs.

- Bugs or uncaught exceptions.

- A service that is required for the logic to work can’t be reached or the connection fails (database, backend, etc.).

Sometimes this error might happen because of errors in the manifest or pod configuration, such as:

- Trying to bind an already used port

- Wrong command arguments for the container

- Errors in liveness probes

- Volume mounting issue
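To narrow down which of these causes applies, it helps to see how often a container restarted and how its last run ended. The sketch below assumes the pod was loaded as a dictionary from `kubectl get pod [name] -o json`. The function name `crash_loop_summary` and the sample data are hypothetical.

```python
def crash_loop_summary(pod: dict) -> list:
    """Summarize the containers of a pod that are in CrashLoopBackOff."""
    summaries = []
    for container in pod.get("status", {}).get("containerStatuses", []):
        waiting = container.get("state", {}).get("waiting") or {}
        if waiting.get("reason") != "CrashLoopBackOff":
            continue
        # lastState.terminated describes how the previous run ended.
        last = container.get("lastState", {}).get("terminated") or {}
        summaries.append(
            {
                "container": container["name"],
                "restarts": container.get("restartCount", 0),
                "last_exit_code": last.get("exitCode"),
                "last_reason": last.get("reason"),
            }
        )
    return summaries


# Made-up status used only to illustrate the function.
example_pod = {
    "status": {
        "containerStatuses": [
            {
                "name": "trainer",
                "restartCount": 7,
                "state": {"waiting": {"reason": "CrashLoopBackOff"}},
                "lastState": {"terminated": {"exitCode": 1, "reason": "Error"}},
            }
        ]
    }
}
print(crash_loop_summary(example_pod))
```

An exit code of 1 with the reason `Error` usually points back to the application logs, whereas a reason such as `OOMKilled` points to resource limits rather than to the code.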

OOMKilled

The OOMKilled error, also indicated by exit code 137, means that a container or pod was terminated because it used more memory than allowed. OOM stands for “Out Of Memory”.

Kubernetes Node Not Ready

When a worker node shuts down, crashes, or gets preempted, all pods that reside on it become unavailable, and the node status appears as NotReady.
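For admins with cluster access, the affected nodes can be listed from the node conditions. This is a minimal sketch that assumes the input is the dictionary obtained from `kubectl get nodes -o json`. The function name `not_ready_nodes` and the node names are made up for illustration.

```python
def not_ready_nodes(node_list: dict) -> list:
    """Return the names of nodes whose Ready condition is not "True"."""
    names = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        ready = next((c for c in conditions if c.get("type") == "Ready"), None)
        # The Ready condition is "True", "False", or "Unknown".
        if ready is None or ready.get("status") != "True":
            names.append(node["metadata"]["name"])
    return names


# Made-up node list used only to illustrate the function.
example_nodes = {
    "items": [
        {"metadata": {"name": "node-a"}, "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
        {"metadata": {"name": "node-b"}, "status": {"conditions": [{"type": "Ready", "status": "Unknown"}]}},
    ]
}
print(not_ready_nodes(example_nodes))
```

A status of `Unknown` typically means the control plane has stopped hearing from the node, so it is treated here the same way as `False`.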

Exit Codes

Pods can terminate with one of the following exit codes:

- Exit Code 0: Purposely stopped. The container's process exited on its own without reporting an error, typically because it finished its work.

- Exit Code 1: Application error. The container shut down, either because of an application logic failure or because the image pointed to an invalid file.

- Exit Code 125: Container failed to run. The docker run command did not execute successfully.

- Exit Code 126: Command invoke error. A command specified in the image specification could not be invoked.

- Exit Code 127: File or directory not found. A file or directory specified in the image specification was not found.

- Exit Code 128: Invalid argument used on exit. The exit was triggered with an invalid exit code (valid codes are integers between 0 and 255).

- Exit Code 134: Abnormal termination (SIGABRT). The container aborted itself using the abort() function.

- Exit Code 137: Immediate termination (SIGKILL). The container was immediately terminated by the operating system via the SIGKILL signal.

- Exit Code 139: Segmentation fault (SIGSEGV). The container attempted to access memory that was not assigned to it and was terminated.

- Exit Code 143: Graceful termination (SIGTERM). The container received a warning that it was about to be terminated, then terminated.

- Exit Code 255: Exit status out of range. The container exited with an exit code outside the acceptable range, meaning the cause of the error is not known.
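The signal-related codes above follow a common convention: the exit code is 128 plus the number of the signal that ended the process, so 137 is 128 + 9 (SIGKILL). The following sketch decodes an exit code with the Python standard library. It assumes POSIX signal numbering, as on Linux nodes, and the function name `describe_exit_code` is only an illustration.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Give a short, human-readable description of a container exit code."""
    if code == 0:
        return "exited without error"
    if 128 < code < 255:
        # Convention: exit code = 128 + number of the terminating signal.
        try:
            return f"terminated by {signal.Signals(code - 128).name}"
        except ValueError:
            return f"terminated by unknown signal {code - 128}"
    return f"exited with error code {code}"


for code in (0, 1, 137, 139, 143):
    print(code, "->", describe_exit_code(code))
```

This decoding only tells you which signal ended the process, not why it was sent. For example, a SIGKILL can come from the out-of-memory killer, but also from a forced pod deletion.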

Diagnosing and resolving Kubernetes issues

If you are using Polyaxon, the best way to diagnose your workload is by checking:

- The logs tab or `polyaxon ops logs`

- The statuses tab or `polyaxon ops statuses`

- The inspection feature or `polyaxon ops inspect`

Additionally, you can start a dummy pod using the same configuration with a debug command:

    container:
      command: ["/bin/bash", "-c"]
      args: ["sleep 3600"]

Then use the shell tab or `polyaxon ops shell` to run the code interactively.

Finally, machine learning workloads are a bit more complex than traditional workloads, because errors sometimes go undetected by traditional monitoring tools. Polyaxon, however, has a tracking module that allows users to add instrumentation to their code to report progress on their metrics, images, graphs, audio, videos, text, and more.