New hyperparameter tuning capabilities

Polyaxon v1 comes with a new and different approach to managing and monitoring hyperparameter tuning:

- It manages concurrency on-pull instead of on-push.

- It tracks dependent runs with the same pipeline abstraction used by DAGs and schedules.

- It provides a fully customizable interface.

- It creates suggestions in a serverless way, so a long-running service is no longer required.
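The difference between on-pull and on-push concurrency can be shown with a toy scheduler. An on-push scheduler submits every run up front. An on-pull scheduler only pulls a new run from its pending list when a slot is free. This is a minimal sketch for intuition only: the `PullPipeline` class and its methods are hypothetical and are not Polyaxon's scheduler.

```python
from collections import deque


class PullPipeline:
    """Hypothetical pipeline that starts runs on pull, not on push."""

    def __init__(self, pending_runs, concurrency):
        self.pending = deque(pending_runs)  # runs not started yet
        self.running = set()                # runs currently in flight
        self.concurrency = concurrency      # max runs in flight at once

    def pull(self):
        """Start pending runs only while there is free capacity."""
        started = []
        while self.pending and len(self.running) < self.concurrency:
            run = self.pending.popleft()
            self.running.add(run)
            started.append(run)
        return started

    def finish(self, run):
        """Mark a run as done, freeing a slot for the next pull."""
        self.running.discard(run)


pipeline = PullPipeline([f"run-{i}" for i in range(5)], concurrency=2)
print(pipeline.pull())  # ['run-0', 'run-1']
print(pipeline.pull())  # [] : no free slot yet
pipeline.finish("run-0")
print(pipeline.pull())  # ['run-2']
```

Nothing is committed ahead of time in this model. A change to `concurrency` between pulls therefore simply applies on the next pull.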

In Polyaxon v1.2, we introduced some new features that improve other aspects of running hyperparameter tuning:

- Customization.

- Debuggability.

- Search and comparison.

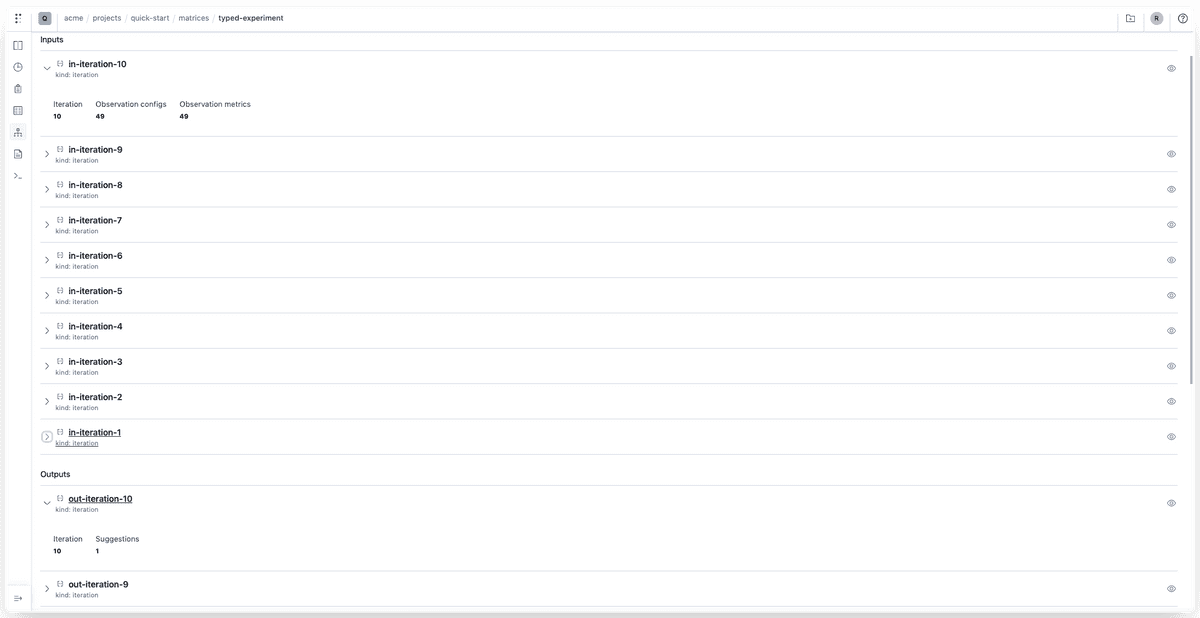

Iteration Lineage

The optimization algorithms provided by our platform are iterative. One of the issues we had with Polyaxon v0 was the lack of an easy way to debug or improve an optimization algorithm.

In Polyaxon v1.2 we introduced a new lineage artifact called iteration:

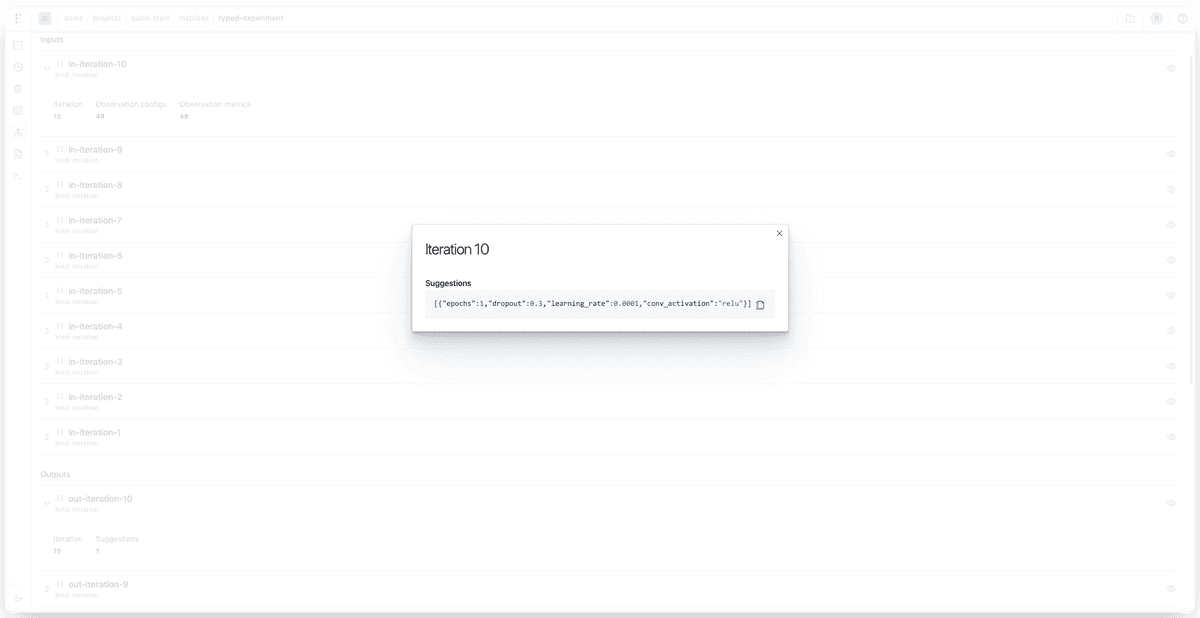

The artifacts lineage tab shows both iteration inputs and outputs.

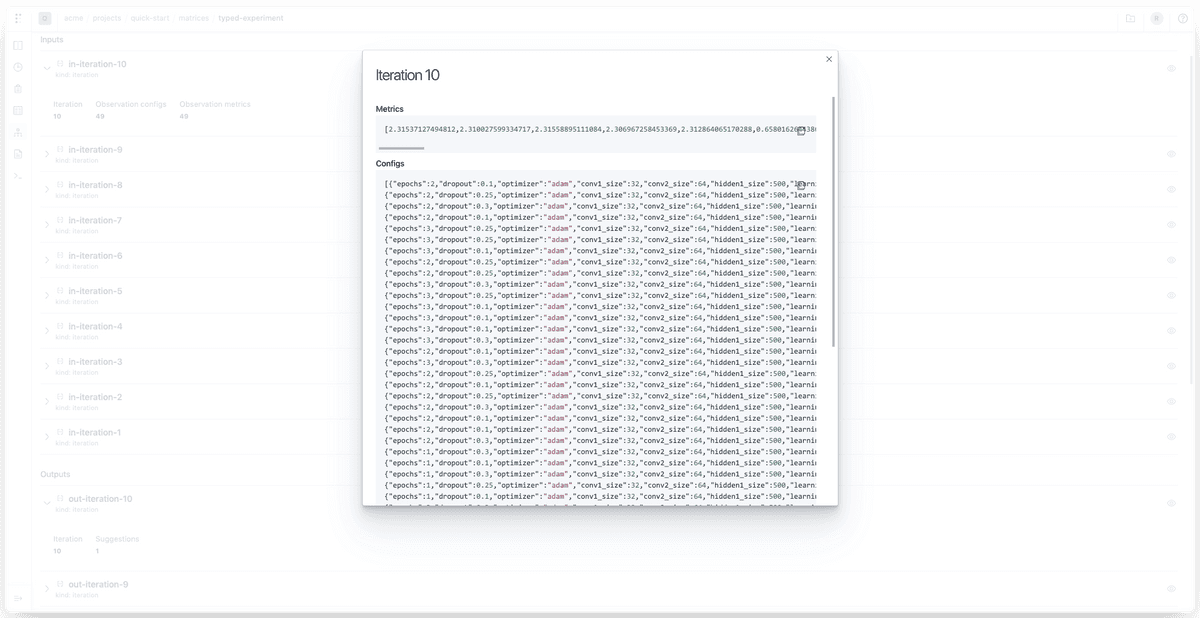

For instance, this Bayesian optimization has several iterations. The last iteration creates suggestions based on 49 observations and generates one new suggestion.

Using these lineage artifacts, users can copy the observed configurations and their metrics and iterate on them offline to improve the suggestion algorithm. When an error occurs, they can also reproduce it on a local machine without rerunning the full optimization process.
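Iterating offline on copied lineage data can be as simple as feeding the observations into your own suggestion function. The sketch below makes two assumptions. First, the observations were pasted into a list of `{"params": ..., "metric": ...}` dictionaries; this shape is hypothetical, and the real lineage format may differ. Second, it uses a deliberately naive "perturb the best config" strategy as a stand-in. It is not Polyaxon's Bayesian optimization.

```python
import random

# Hypothetical copy of one iteration's inputs from the lineage tab:
# each observation pairs a suggested config with the metric it produced.
observations = [
    {"params": {"lr": 0.01, "batch_size": 32}, "metric": 0.81},
    {"params": {"lr": 0.001, "batch_size": 64}, "metric": 0.86},
    {"params": {"lr": 0.1, "batch_size": 16}, "metric": 0.62},
]


def suggest(observations, n=2, spread=0.5, seed=None):
    """Toy stand-in for a tuner: perturb the learning rate of the best config.

    Assumes a higher metric is better. This is NOT Polyaxon's Bayesian
    optimization; replace it with your own logic to experiment offline.
    """
    rng = random.Random(seed)
    best = max(observations, key=lambda o: o["metric"])["params"]
    return [
        {**best, "lr": best["lr"] * (1 + rng.uniform(-spread, spread))}
        for _ in range(n)
    ]


for suggestion in suggest(observations, seed=0):
    print(suggestion)
```

Fixing `seed` makes a given set of suggestions reproducible. This helps when you are trying to reproduce a failing iteration locally.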

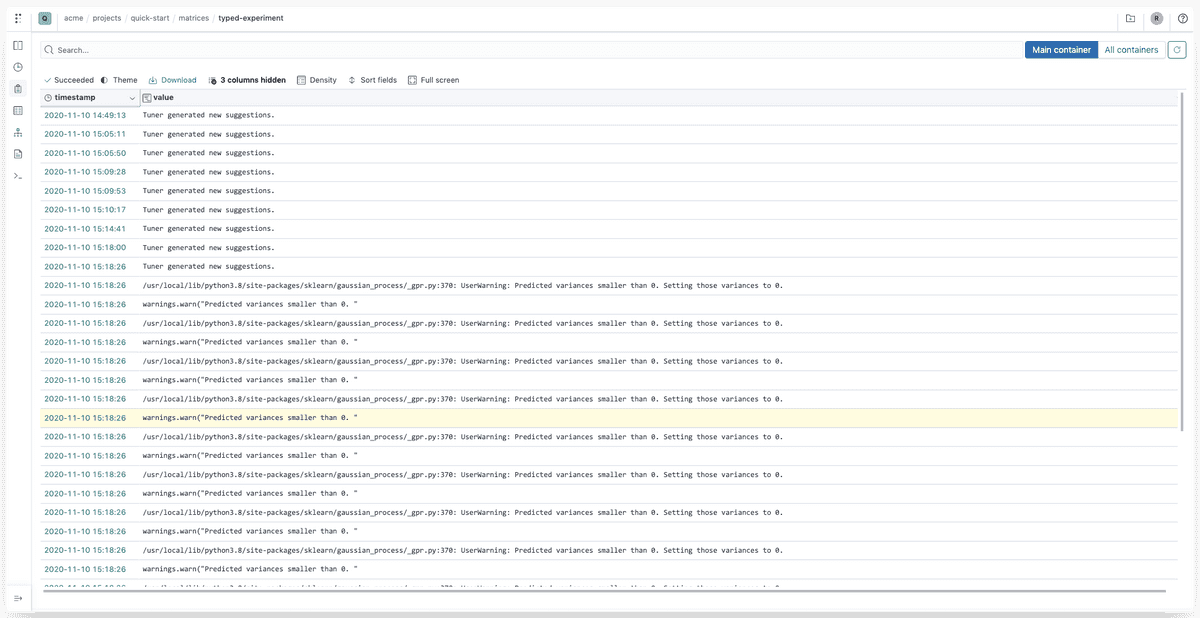

Polyaxon now also stores the logs of the tuning containers, which comes in handy when debugging an error.

Customization

Because the iteration lineage makes it possible to run the optimization outside of a Polyaxon cluster, users can improve the tuning process or use different algorithms. Beyond the generic iterative optimization process that Polyaxon exposes, you can now also customize the tuners that Polyaxon uses. In other words, users can replace the default Bayesian optimization, Hyperband, … implementations with their own logic simply by overriding the container section:

```yaml
version: 1.1
kind: operation
matrix:
  kind: bayes
  container: NEW_CONTAINER_DEFINITION
...
```

Midrun concurrency update

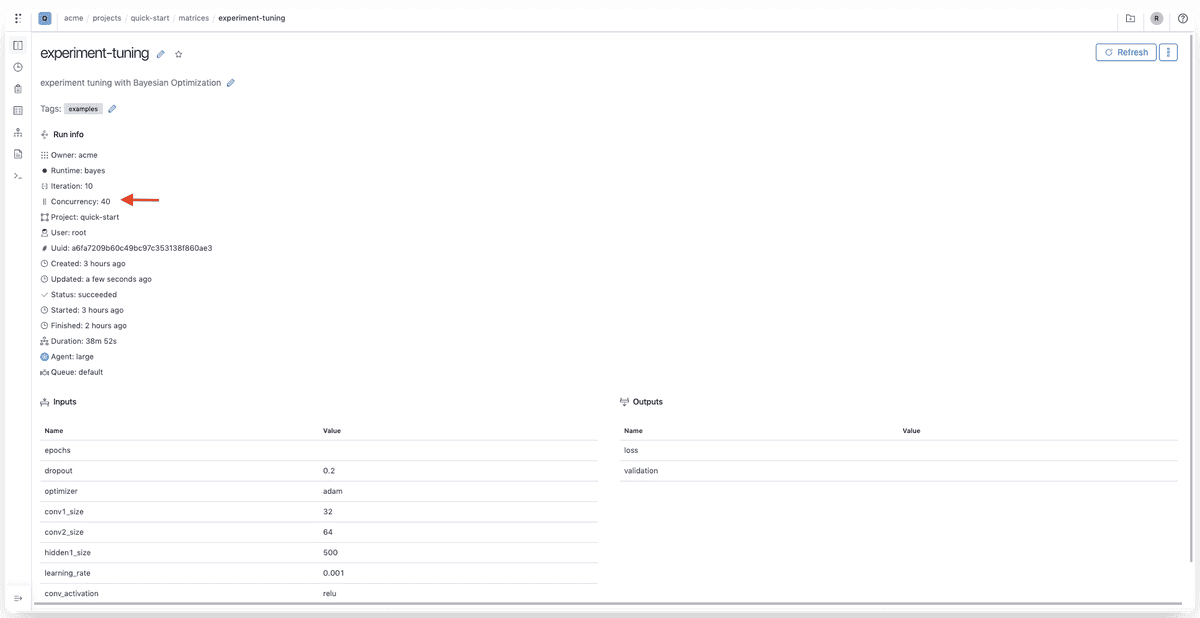

In v0, it was impossible to change the concurrency of a hyperparameter tuning run once it was scheduled. In v1, we introduced queues. Users can schedule their operations on queues and update a queue's concurrency and priority, and such an update affects all runs and pipelines scheduled on that queue.

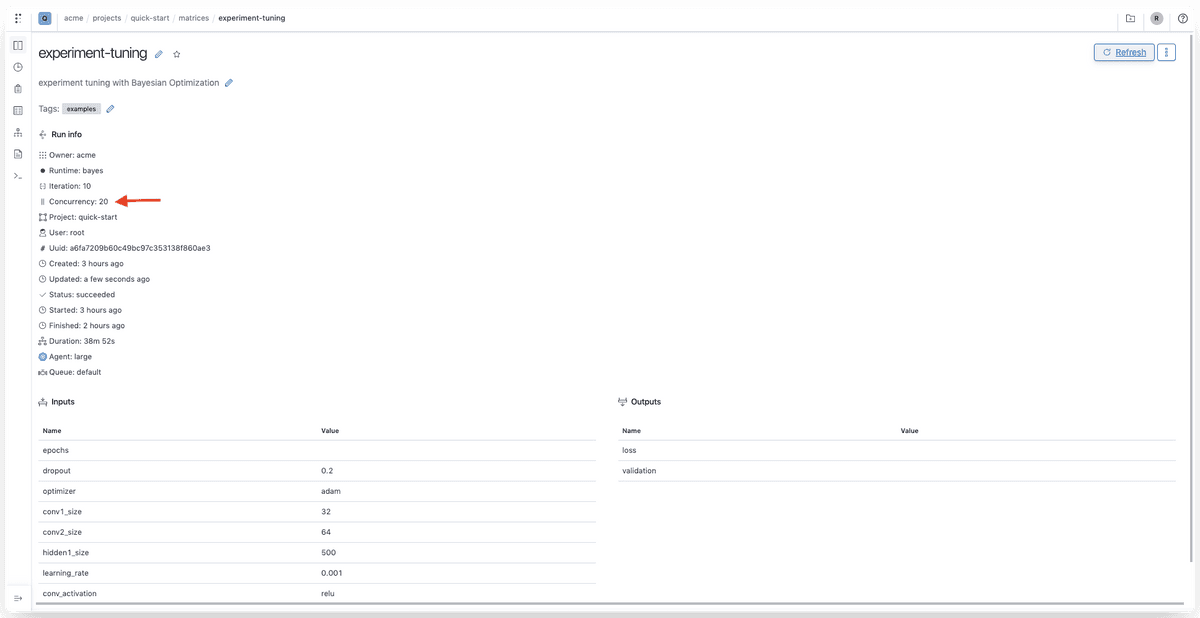

In v1.2, users can update the concurrency not only of queues but also of any pipeline, including hyperparameter tuning pipelines. Users no longer need to change a queue's concurrency, which would also impact the other runs and pipelines on that queue. Instead, they can increase or decrease the concurrency of a single pipeline or nested pipeline. Note that the concurrency of any pipeline is limited by the concurrency of the queue it runs on.

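```python
from polyaxon.client import RunClient
RunClient(owner="OWNER", project="PROJECT", run_uuid="PIPELINE_UUID").log_meta(concurrency=20)
```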

Currently, this feature is only available via the client/API, but we will expose it in the UI very soon.
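The rule that a pipeline's concurrency is capped by its queue can be made concrete with a toy model. Here one queue is shared by two pipelines. Each pipeline can only start as many runs as both its own concurrency and the queue's remaining capacity allow. The `Queue` and `Pipeline` classes and their fields are hypothetical illustrations, not Polyaxon objects.

```python
from dataclasses import dataclass


@dataclass
class Queue:
    name: str
    concurrency: int
    running: int = 0


@dataclass
class Pipeline:
    name: str
    queue: Queue
    concurrency: int
    running: int = 0

    def free_slots(self) -> int:
        # A pipeline can never start more runs than its queue has room for.
        return max(0, min(self.concurrency - self.running,
                          self.queue.concurrency - self.queue.running))

    def start(self, n: int) -> int:
        """Try to start n runs; return how many actually started."""
        n = min(n, self.free_slots())
        self.running += n
        self.queue.running += n
        return n


queue = Queue("gpu", concurrency=10)
tuning = Pipeline("bayes-tuning", queue, concurrency=4)
other = Pipeline("training-dag", queue, concurrency=8)

print(tuning.start(20))  # 4: limited by the pipeline's own concurrency
print(other.start(20))   # 6: limited by what is left on the queue
tuning.concurrency = 20  # raise the tuning pipeline's concurrency midrun
print(tuning.start(20))  # 0: the queue is already full
```

Raising the tuning pipeline's concurrency leaves the other pipeline's limit untouched. It also cannot push the total past the queue's limit.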

Reproducing and rerunning

Since it's possible to copy an iteration's observations, metrics, and suggestions, users can also paste the suggestions of any iteration into a mapping and run them in parallel without rerunning the full optimization process:

---

matrix:

kind: mapping

values: [...] # values copied from the lineage

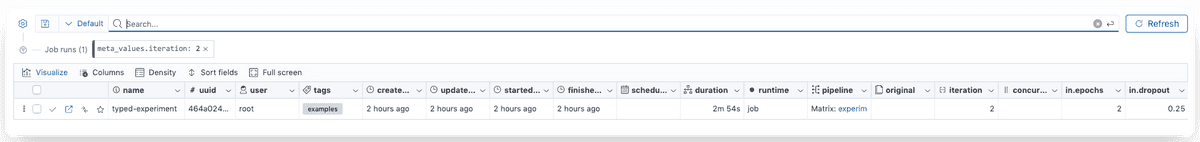

# Ref to the component to runIteration filters and comparison

Finally, you can filter runs by iteration, compare them, and plot visualizations. You can also compare one iteration against another.
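The idea behind comparing iterations can be sketched offline: group runs by the iteration that produced them and summarize each group. The run records below are hypothetical; their field names are illustrative and not Polyaxon's schema. The sketch assumes a lower loss is better.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical run records, e.g. exported from the runs table.
runs = [
    {"name": "trial-1", "iteration": 1, "loss": 0.52},
    {"name": "trial-2", "iteration": 1, "loss": 0.47},
    {"name": "trial-3", "iteration": 2, "loss": 0.41},
    {"name": "trial-4", "iteration": 2, "loss": 0.44},
]


def by_iteration(runs):
    """Group runs by the iteration that suggested them."""
    groups = defaultdict(list)
    for run in runs:
        groups[run["iteration"]].append(run)
    return dict(groups)


def summarize(runs, metric="loss"):
    """Count, best (lowest) and mean value of a metric over some runs."""
    values = [r[metric] for r in runs]
    return {"count": len(values), "best": min(values), "mean": mean(values)}


def compare(groups, a, b, metric="loss"):
    """Change in best metric from iteration a to b (negative = lower loss)."""
    return summarize(groups[b], metric)["best"] - summarize(groups[a], metric)["best"]


groups = by_iteration(runs)
for iteration, iteration_runs in sorted(groups.items()):
    print(iteration, summarize(iteration_runs))
print("best loss change 1 -> 2:", compare(groups, 1, 2))
```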

Learn More about Polyaxon

This blog post covers only a couple of the features we shipped since our last product update; several other features and fixes are worth checking out. To learn more about all the features, fixes, and enhancements, please visit the release notes.

Polyaxon continues to grow quickly and to improve on its goal of providing the simplest machine learning abstraction. We hope these updates improve your workflows and increase your productivity. Again, thank you for your continued feedback and support.