Joins

Until now, Polyaxon only provided interfaces for fanning out operations: either over a list of parameters using Mapping, or through a hyperparameter tuning algorithm supported by the Matrix section.

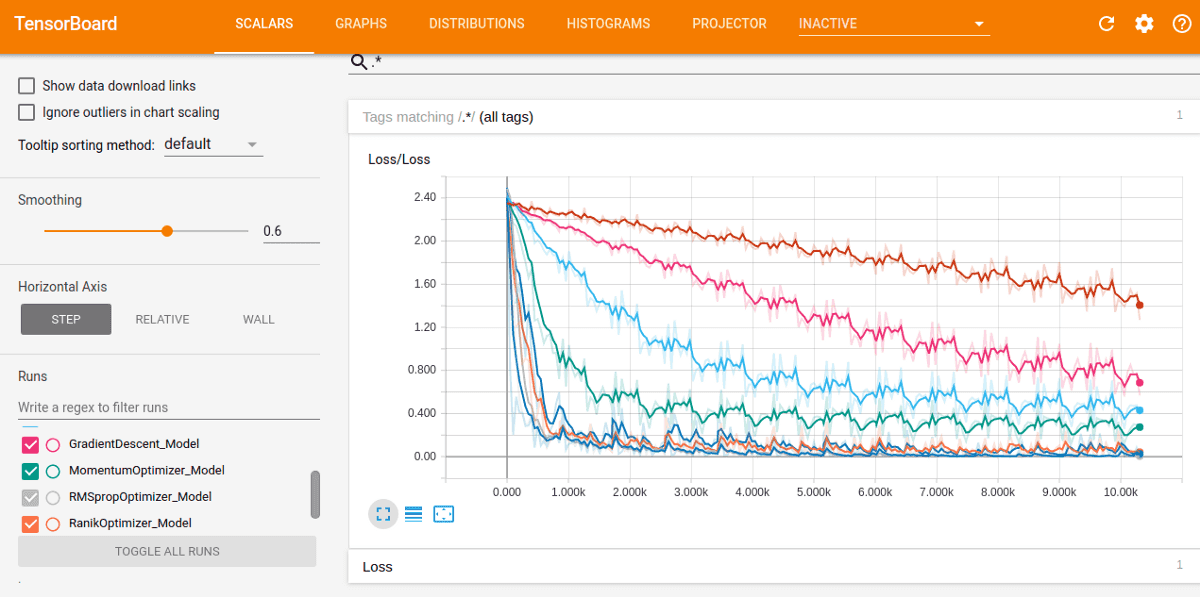

The Join interface is a new abstraction for performing fan-in operations. A typical use case for such an interface is the map-reduce pattern, but it is also the interface Polyaxon uses to provide performance-based Tensorboards, i.e. starting a Tensorboard based on a search: {query: metrics.loss:<0.01, sort: metrics.loss, limit: 10}.

Polyaxon Join is not a replacement for other map-reduce frameworks. Rather, it provides a convenient way to collect inputs, outputs, lineages, contexts, and artifacts from upstream runs based on the Polyaxon Query Language.

A Join can be used either in an independent operation or in the context of a DAG, and each operation can perform one or multiple joins.
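To make the query, sort, and limit semantics of a join more concrete, here is a minimal Python sketch of the fan-in selection step. It is not Polyaxon's implementation: the `RUNS` list and the `join_runs` helper are hypothetical, in-memory stand-ins for a search that Polyaxon would perform against its own run database.

```python
# Hypothetical in-memory stand-ins for runs; real runs live in Polyaxon's database.
RUNS = [
    {"uuid": "a1", "metrics": {"loss": 0.004}},
    {"uuid": "b2", "metrics": {"loss": 0.020}},
    {"uuid": "c3", "metrics": {"loss": 0.001}},
    {"uuid": "d4", "metrics": {"loss": 0.009}},
]


def join_runs(runs, max_loss, limit):
    """Fan-in selection: keep runs with loss < max_loss, sort by ascending loss, cap at limit."""
    matched = [r for r in runs if r["metrics"]["loss"] < max_loss]
    matched.sort(key=lambda r: r["metrics"]["loss"])
    return matched[:limit]


# Roughly mirrors {query: metrics.loss:<0.01, sort: metrics.loss, limit: 2}.
selected = join_runs(RUNS, max_loss=0.01, limit=2)
selected_uuids = [r["uuid"] for r in selected]
print(selected_uuids)
```

The point of the sketch is that the caller never names run ids up front: the set of upstream runs is whatever matches the criteria at the time the join is resolved.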

Let’s look at some concrete examples.

Performance-based Tensorboard

A performance-based Tensorboard operation starts a Tensorboard dynamically, based on search criteria, without prior knowledge of the runs’ ids.

    version: 1.1
    kind: operation
    name: compare-top-experiments
    joins:
      - query: 'metrics.loss:<0.01'
        sort: 'metrics.loss'
        limit: '5'
        params:
          tensorboards:
            value: { dirs: '{{ artifacts.tensorboard }}' }
    component:
      inputs:
        - { name: tensorboards, type: artifacts, toInit: true }
      run:
        kind: service
        ports:
          - 6006
        container:
          image: tensorflow/tensorflow:2.2.0
          command:
            - tensorboard
          args:
            - '--logdir={{globals.artifacts_path}}'
            - '--port={{globals.ports[0]}}'
            - '--path_prefix={{globals.base_url}}'
            - '--host=0.0.0.0'

In this example, Polyaxon will automatically perform a search and collect artifacts logged under the name tensorboard.

Note that when you use the artifacts prefix, Polyaxon looks in the lineage table. If you do not log the lineage using Polyaxon, you can still pass a subpath instead, e.g. sub-path/in/each/run/in/the/search.
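The difference between a lineage reference and a plain subpath can be sketched in Python as follows. This is an illustration under stated assumptions, not how Polyaxon resolves paths internally: the run records, the `root` and `lineage` fields, and the `resolve_dir` helper are all hypothetical.

```python
from pathlib import PurePosixPath

# Hypothetical run records: one logged its Tensorboard dir as lineage, one did not.
RUNS = [
    {"root": "/store/r1", "lineage": {"tensorboard": "/store/r1/outputs/tensorboard"}},
    {"root": "/store/r2", "lineage": {}},
]


def resolve_dir(run, ref):
    """Resolve `ref` to a directory for a single run.

    A reference of the form 'artifacts.<name>' is looked up in the run's
    logged lineage; anything else is treated as a subpath under the run's root.
    """
    prefix = "artifacts."
    if ref.startswith(prefix):
        name = ref[len(prefix):]
        return run["lineage"][name]  # raises KeyError if the lineage was never logged
    return str(PurePosixPath(run["root"]) / ref)


from_lineage = resolve_dir(RUNS[0], "artifacts.tensorboard")
from_subpath = resolve_dir(RUNS[1], "logs/tb")
print(from_lineage, from_subpath)
```

In this sketch a lineage lookup fails loudly when nothing was logged under that name, which is the situation where falling back to an explicit subpath becomes useful.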

Map-Reduce

Joins can be used to automate a fan-out -> fan-in, or map-reduce, process.

    version: 1.1
    kind: component
    run:
      kind: dag
      operations:
        - name: fan_out
          hubRef: "my-component:v1"
          matrix:
            kind: random
            numRuns: 20
            params:
              learning_rate:
                kind: linspace
                value: 0.001:0.1:5
              dropout:
                kind: choice
                value: [0.25, 0.3]
              conv_activation:
                kind: pchoice
                value: [[relu, 0.1], [sigmoid, 0.8]]
              epochs:
                kind: choice
                value: [5, 10]
        - name: fan_in
          params:
            matrix_uuid:
              ref: ops.fan_out
              value: globals.uuid
              contextOnly: true
          joins:
            - query: "metrics.accuracy:>0.9, pipeline:{{ matrix_uuid }}"
              sort: "-metrics.accuracy"
              params:
                uuids: {value: "globals.uuid", contextOnly: true}
                learning_rates: {value: "inputs.learning_rate", contextOnly: true}
                accuracies: {value: "outputs.accuracy", contextOnly: true}
                losses: {value: "outputs.loss", contextOnly: true}
          component:
            run:
              kind: job
              container:
                image: image
                command: ["/bin/bash", "-c"]
                args: ["echo {{ uuids }}; echo {{ learning_rates }}; echo {{ accuracies }}; echo {{ losses }}"]

In the example above, instead of searching the complete project, we restrict the search to a specific subset defined by the pipeline managing the random search algorithm (the same logic can be used for Mapping, grid search, Bayesian optimization, …).

In this example, the reduce operation is not doing anything important, just printing some of the inputs and outputs collected.
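As a rough illustration of what the fan_in operation receives, the following Python sketch mimics the join on in-memory data: it restricts the search to one pipeline, filters on accuracy, sorts in descending order, and collects one list per join param. The run records and the `fan_in` helper are hypothetical, and for simplicity the sketch assumes the accuracy used in the query is the same value exposed under outputs.

```python
# Hypothetical run records; the field names loosely mirror the Polyaxonfile above.
RUNS = [
    {"uuid": "r1", "pipeline": "p-1", "inputs": {"learning_rate": 0.001},
     "outputs": {"accuracy": 0.95, "loss": 0.10}},
    {"uuid": "r2", "pipeline": "p-1", "inputs": {"learning_rate": 0.050},
     "outputs": {"accuracy": 0.88, "loss": 0.30}},
    {"uuid": "r3", "pipeline": "p-1", "inputs": {"learning_rate": 0.025},
     "outputs": {"accuracy": 0.97, "loss": 0.07}},
    {"uuid": "r4", "pipeline": "p-2", "inputs": {"learning_rate": 0.010},
     "outputs": {"accuracy": 0.99, "loss": 0.02}},
]


def fan_in(runs, matrix_uuid, min_accuracy):
    """Collect per-run values into parallel lists, best accuracy first."""
    matched = [
        r for r in runs
        if r["pipeline"] == matrix_uuid and r["outputs"]["accuracy"] > min_accuracy
    ]
    matched.sort(key=lambda r: r["outputs"]["accuracy"], reverse=True)
    return {
        "uuids": [r["uuid"] for r in matched],
        "learning_rates": [r["inputs"]["learning_rate"] for r in matched],
        "accuracies": [r["outputs"]["accuracy"] for r in matched],
        "losses": [r["outputs"]["loss"] for r in matched],
    }


collected = fan_in(RUNS, matrix_uuid="p-1", min_accuracy=0.9)
# A reduce step that does more than print: pick the learning rate of the best run.
best_learning_rate = collected["learning_rates"][0]
print(collected["uuids"], best_learning_rate)
```

Because every list is built from the same sorted sequence of runs, index i in one list refers to the same run as index i in the others, which is what lets a reduce step relate a learning rate to its accuracy.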

Learn More about Polyaxon

This blog post only covers a couple of the features that we shipped since our last product update; there are several other features and fixes worth checking. To learn more about all the features, fixes, and enhancements, please visit the release notes.

Polyaxon continues to grow quickly and keeps improving and providing the simplest machine learning abstraction. We hope that these updates will improve your workflows and increase your productivity, and again, thank you for your continued feedback and support.